Overview

A/B experimentation is critical for direct-to-consumer (DTC) brands, as it allows for the comparison of various versions of webpages or apps to identify which one enhances user engagement and conversion rates.

Systematic A/B testing, underpinned by statistical analysis and continuous iteration, yields actionable insights and substantial improvements in marketing effectiveness.

This is supported by case studies showing significant increases in conversion rates and revenue, which make a strong case for treating A/B testing as a strategic priority.

Introduction

A/B testing has established itself as a cornerstone for direct-to-consumer (DTC) brands aiming to optimize their online strategies and drive growth. By systematically comparing variations of a webpage or app, companies can uncover insights that lead to enhanced user engagement and elevated conversion rates. Yet, the challenge lies in the effective implementation of these experiments.

What are the best practices to ensure reliable results and actionable outcomes? This article explores the fundamentals of A/B experimentation, providing a roadmap for brands to master this technique and leverage it for sustained success in an increasingly competitive landscape.

Understand A/B Testing Fundamentals

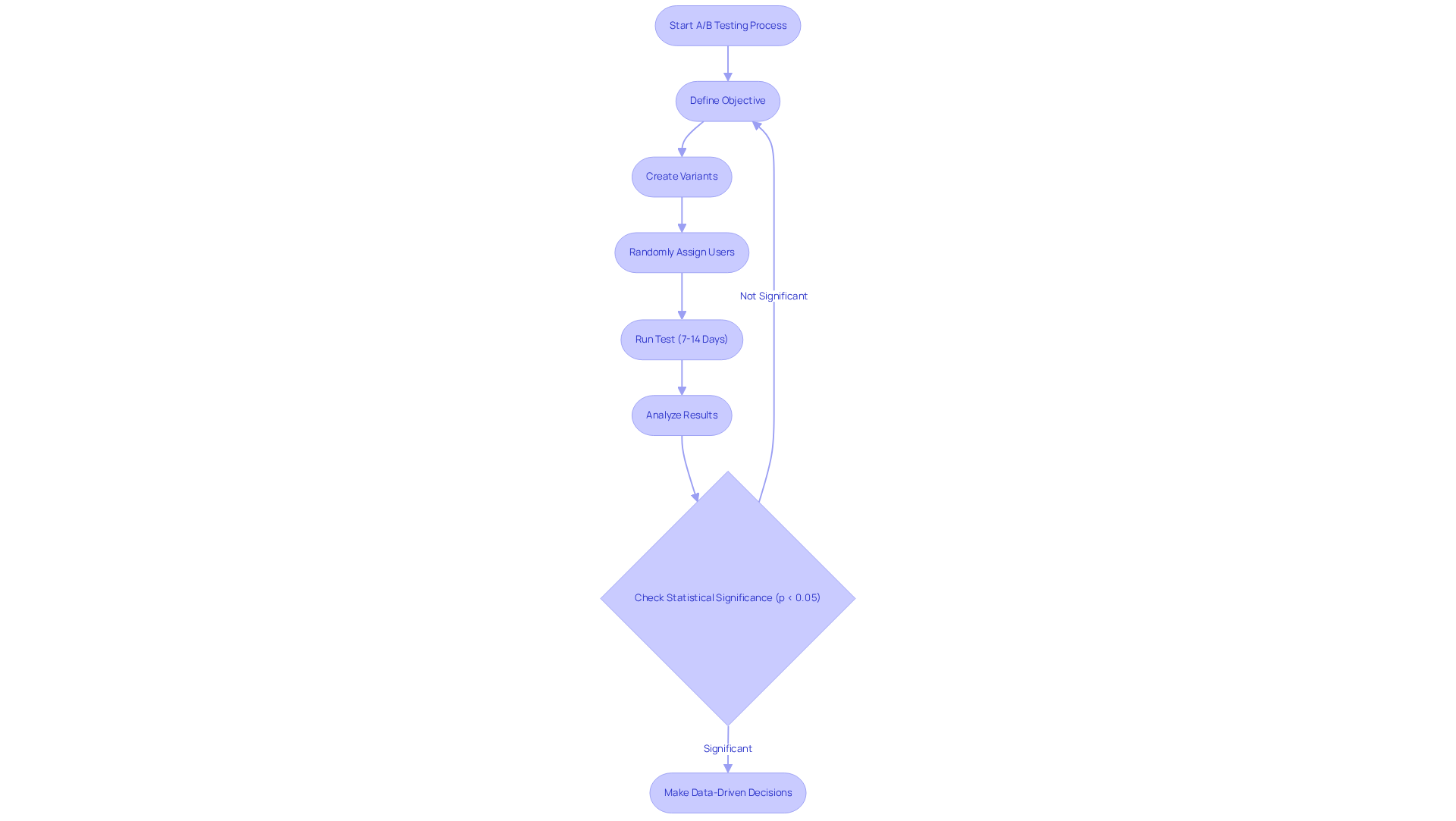

A/B experimentation, also referred to as split testing, is a robust method for comparing two versions of a webpage or app to determine which performs better. Users are randomly assigned to one of two groups: Group A sees the original version (the control), while Group B sees a modified version (the variant). By analyzing user engagement and conversion metrics, companies can pinpoint which version yields better outcomes. Because the method is grounded in statistical analysis, marketers can make informed, data-driven decisions rather than relying solely on intuition.
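The article does not say how users are randomized. One common approach is deterministic hash-based bucketing, shown here as a minimal Python sketch. A user always lands in the same group for a given experiment, and traffic splits close to the target ratio. The function name `assign_group`, the SHA-256 choice, and the 50/50 default are illustrative assumptions, not any particular testing tool's API.

```python
import hashlib


def assign_group(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Return 'A' (control) or 'B' (variant) for this user and experiment."""
    # Hashing experiment name + user ID keeps a user's group stable across
    # visits, while the same user can fall into different groups in
    # different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    groups = [assign_group(f"user-{i}", "homepage-hero") for i in range(10_000)]
    print("A:", groups.count("A"), "B:", groups.count("B"))
```

Deterministic hashing avoids storing assignments and prevents a returning visitor from seeing both versions. The trade-off is that changing `split` mid-test moves some users between groups.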

In 2025, it is projected that approximately 70% of DTC companies will incorporate A/B testing as a fundamental strategy for enhancing their online presence and customer engagement. This approach is particularly important for improving conversion rates, because it lets companies test elements such as product visuals, call-to-action placements, and pricing strategies. In one notable case, a DTC brand experimented with different product images, and the variant featuring lifestyle images achieved a 20% higher click-through rate (CTR) than standard product shots.

The importance of A/B experimentation extends beyond mere trials; it serves as an indispensable resource for DTC companies aiming to refine their marketing strategies and enhance overall effectiveness. Through systematic evaluation and analysis of results, companies can uncover actionable insights that lead to improved customer experiences and increased sales. As evidenced by Parah Group's case studies, brands that engage in continuous A/B experimentation can achieve remarkable outcomes, including a 35% rise in conversion rates and a 10% increase in revenue per visitor.

To ensure the reliability of A/B test results, it is essential to reach statistical significance, typically at a 95% confidence level (p-value < 0.05). A/B tests should also generally run for 7 to 14 days to accumulate sufficient data for precise analysis. Brands must avoid common pitfalls, such as changing more than one variable per test or allowing unbalanced traffic distribution between groups, either of which can skew results. Parah Group advocates a customized approach to communication and involvement, allowing clients to engage as much or as little as they prefer throughout the testing process.
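Whether 7 to 14 days is enough depends on traffic and on the size of the effect you want to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions to estimate visitors per group, then converts that figure into days. The 80% statistical power default, the helper names, and rounding up to whole weeks are assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group to detect `relative_lift` over `baseline`
    with a two-sided test at significance level `alpha`."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(n_per_group: int, daily_visitors: int, groups: int = 2) -> int:
    """Days of traffic required, rounded up to whole weeks (minimum one week)
    so that every day of the week is represented."""
    days = math.ceil(n_per_group * groups / daily_visitors)
    return max(7, math.ceil(days / 7) * 7)


n = sample_size_per_group(baseline=0.03, relative_lift=0.10)
print(n, "visitors per group;", days_needed(n, daily_visitors=10_000), "days")
```

With a 3% baseline conversion rate and a hoped-for 10% relative lift, this works out to roughly 53,000 visitors per group, or about two weeks at 10,000 daily visitors. Smaller lifts or lower-traffic stores can need considerably longer than the 7-to-14-day rule of thumb.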

Define Clear Objectives and Hypotheses

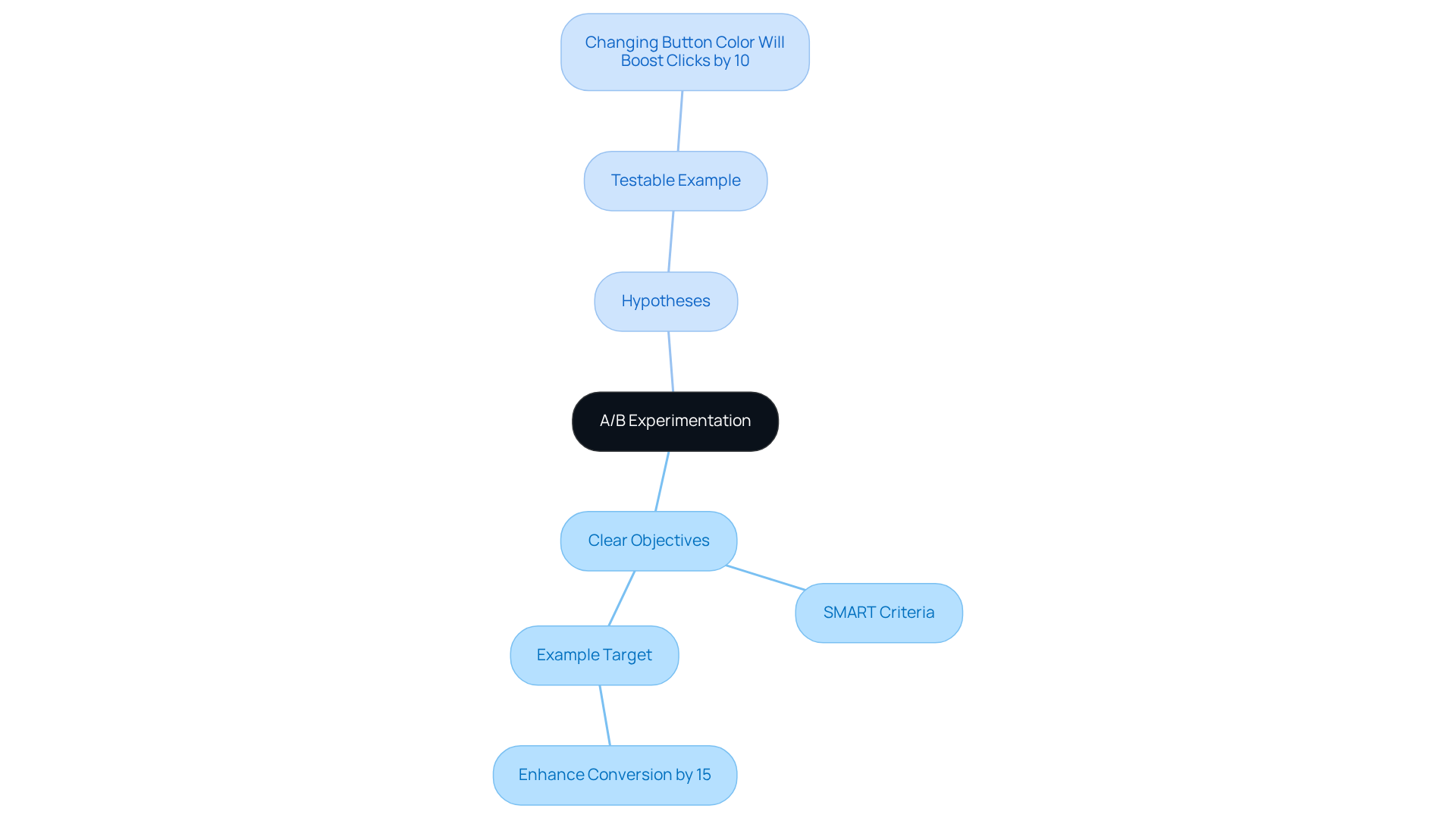

Before starting A/B experimentation, define clear objectives. These objectives should be specific, measurable, achievable, relevant, and time-bound (SMART). For instance, a DTC company might aim to increase its conversion rate by 15% over the upcoming quarter. This kind of target aligns with the proven tactics employed by Parah Group, which has helped businesses achieve substantial revenue growth through extensive experimentation.

Alongside these objectives, formulating a hypothesis is crucial. A hypothesis is a testable statement predicting how a change will affect user behavior. For example: 'Changing the call-to-action button color from blue to green will increase the click-through rate by 10%.' This structured approach gives the test direction and makes it possible to evaluate the results against predefined success metrics.
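One way to keep objectives and hypotheses explicit is to record them as data before a test launches. The minimal Python sketch below encodes the button-color example. The `Hypothesis` class, its fields, the 4% baseline CTR, and the deadline are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Hypothesis:
    change: str           # what the variant modifies
    metric: str           # the success metric being measured
    baseline_rate: float  # current value, e.g. 0.04 for a 4% CTR
    expected_lift: float  # predicted relative change, e.g. 0.10 for +10%
    deadline: date        # time-bound: when the test must conclude

    def target_rate(self) -> float:
        """Metric value the variant must reach for the prediction to hold."""
        return self.baseline_rate * (1 + self.expected_lift)

    def prediction_met(self, observed_rate: float) -> bool:
        """Compare an observed rate with the target (point estimate only)."""
        return observed_rate >= self.target_rate()


cta_color = Hypothesis(
    change="CTA button color: blue -> green",
    metric="click-through rate",
    baseline_rate=0.04,
    expected_lift=0.10,
    deadline=date(2025, 6, 30),
)
print(round(cta_color.target_rate(), 4))
```

`prediction_met` checks only the observed rate against the target. The difference should still pass a significance test before the hypothesis is treated as confirmed.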

By leveraging data-driven insights and thorough evaluations, brands can maximize profitability and drive sustainable growth. Successful case studies from Parah Group, such as a homepage redesign that increased conversion rates by 35%, illustrate the results these strategies can achieve. Parah Group's holistic approach also keeps paid ads and landing pages aligned, which makes conversion rate optimization (CRO) efforts more effective.

Choose Key Variables for Testing

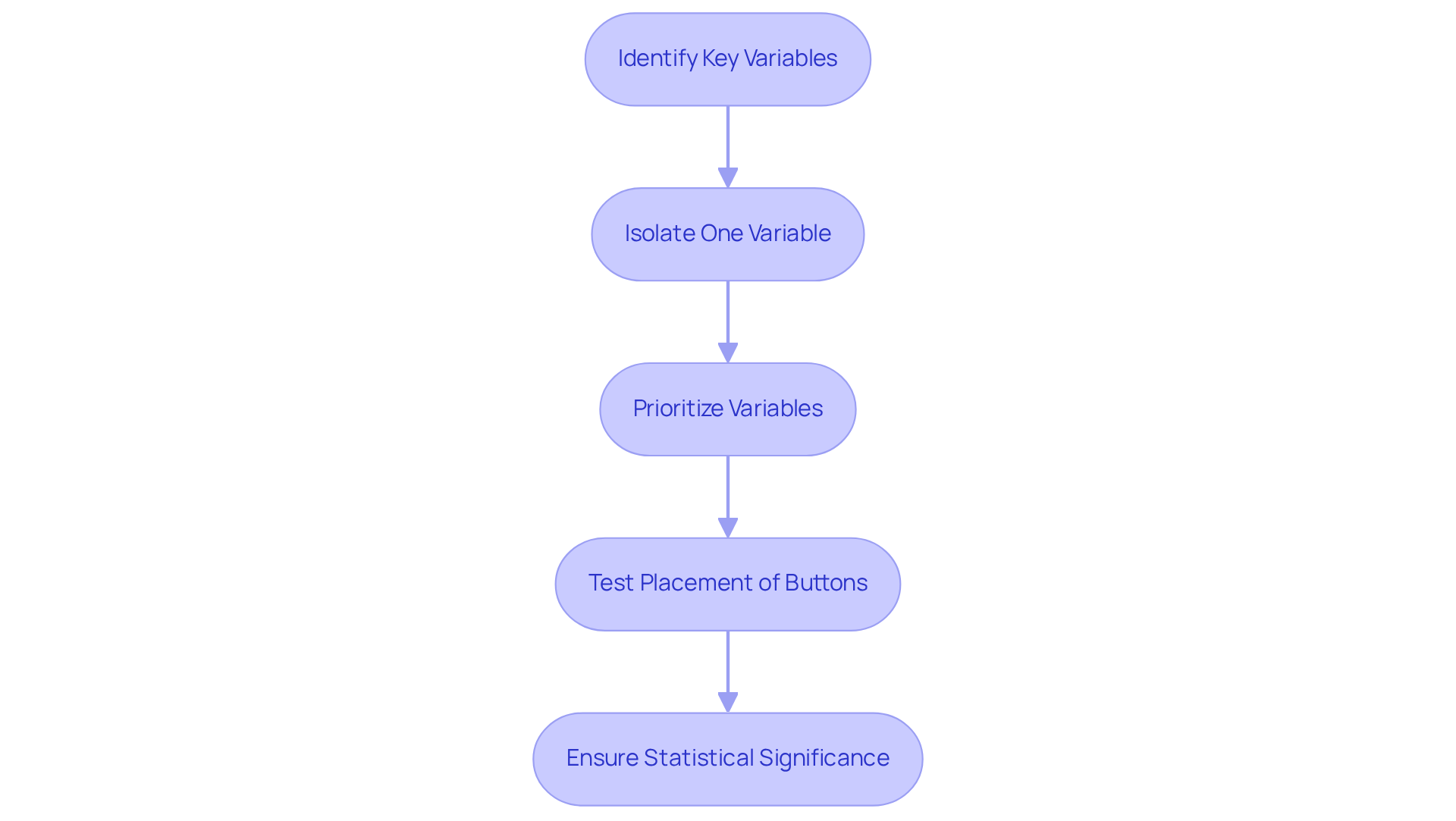

In A/B testing, selecting the variables that most influence user behavior is paramount. Common variables include headlines, images, button colors, and overall layout. Isolate one variable at a time to accurately assess its effect on user interactions. For instance, if a brand wants to evaluate a new button color, all other elements should remain unchanged. This method shows clearly how the modification affects conversion rates.
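The one-variable rule can be enforced mechanically if each version's page elements are described as a simple configuration. The Python sketch below assumes a hypothetical dictionary format for page variants that is not tied to any testing platform, and it rejects any variant that changes more than one element.

```python
def changed_elements(control: dict, variant: dict) -> set:
    """Return the names of page elements whose values differ."""
    keys = control.keys() | variant.keys()
    return {k for k in keys if control.get(k) != variant.get(k)}


def require_single_change(control: dict, variant: dict) -> str:
    """Raise if the variant changes anything other than exactly one element."""
    diff = changed_elements(control, variant)
    if len(diff) != 1:
        raise ValueError(f"expected exactly one changed element, got {sorted(diff)}")
    return diff.pop()


control = {"headline": "Built to last", "button_color": "blue", "hero_image": "product.jpg"}
variant = {**control, "button_color": "green"}
print(require_single_change(control, variant))
```

Running this check before launch catches accidental edits, such as a copy tweak slipped into a color test, that would make the result impossible to attribute.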

Prioritizing variables based on their potential impact can lead to substantial improvements; for example, changing a call-to-action button from 'Sign Up' to 'Start Free Trial' can increase click-through rates by as much as 15%. Furthermore, evaluating the position of a call-to-action button is a typical A/B experimentation strategy that often yields more significant outcomes than slight modifications to font size.
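The article does not name a prioritization method. One widely used heuristic is ICE scoring (Impact, Confidence, Ease), sketched below in Python. The 1-to-10 scales, the averaging of the three scores (some teams multiply them instead), and the example ideas and scores are all illustrative assumptions, not measured data.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # 1-10: expected effect on the primary metric
    confidence: int  # 1-10: strength of the evidence behind the idea
    ease: int        # 1-10: how cheap the test is to build and run

    @property
    def ice_score(self) -> float:
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas: list) -> list:
    """Return ideas sorted with the highest ICE score first."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


backlog = [
    TestIdea("Font size 16px -> 17px", impact=2, confidence=4, ease=9),
    TestIdea("CTA copy: 'Sign Up' -> 'Start Free Trial'", impact=8, confidence=6, ease=9),
    TestIdea("Move CTA above the fold", impact=7, confidence=6, ease=7),
]
for idea in prioritize(backlog):
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

With these made-up scores, the CTA copy and CTA position tests rank above the font-size tweak, which matches the article's guidance.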

To maximize impact, direct-to-consumer (DTC) companies should concentrate on the elements that generate the highest engagement. A comprehensive approach to A/B experimentation, as emphasized in thorough conversion rate optimization (CRO) strategies, keeps paid advertisements and landing pages aligned and drives growth and higher conversion rates.

Moreover, it is crucial to recognize that CRO wins provide returns for months, unlike ad spend, which ceases as soon as payments stop. Ensuring statistical significance in A/B comparisons is vital for making informed decisions based on reliable data, as it confirms that observed differences are not merely due to random chance.

Steps for Effective A/B Testing:

- Identify key variables to test (e.g., headlines, images, button colors).

- Isolate one variable at a time for accurate assessment.

- Prioritize variables based on potential impact.

- Test placement of call-to-action buttons for significant results.

- Ensure statistical significance to validate findings.

Analyze Results for Informed Decision-Making

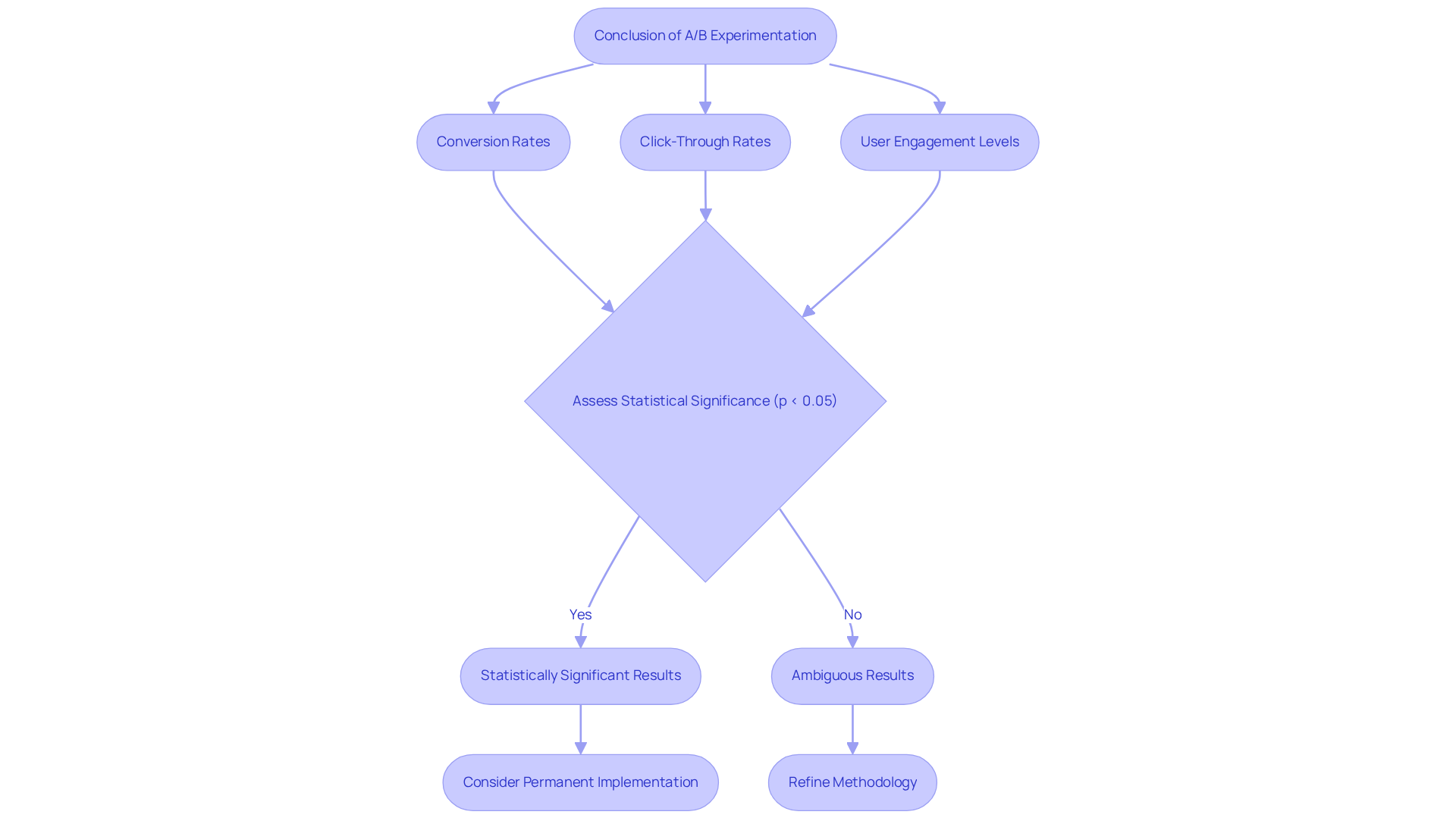

Upon conclusion of the A/B experimentation, it is imperative to analyze the results to ascertain which version outperformed the other. Essential metrics for evaluation include:

- Conversion rates

- Click-through rates

- User engagement levels

It is critical to assess statistical significance to confirm that the observed differences are not merely a product of random chance; a common threshold for this significance is a p-value of less than 0.05. Furthermore, one must consider the practical implications of the results. For example, if a variant demonstrates a statistically significant increase in conversions, it may warrant permanent implementation. Conversely, if the results remain ambiguous, further examination may be necessary to refine the methodology.
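For conversion-style metrics, a standard way to check significance is a two-proportion z-test. The minimal Python sketch below uses only the standard library. The function name and the example counts are hypothetical, and a given testing platform may use a different method, such as a Bayesian or sequential approach.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Two-sided z-test for a difference in conversion rates (B vs. A)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": (rate_b - rate_a) / rate_a,
        "z": z,
        "p_value": p_value,
    }


result = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"lift {result['relative_lift']:.1%}, p = {result['p_value']:.4f}")
```

Here a 5.0% vs. 5.8% conversion rate (a 16% relative lift) gives a p-value of roughly 0.012, below the 0.05 threshold. The same rates on one tenth of the traffic would not be significant, which is why sample size matters as much as the observed lift.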

To ensure reliable data collection, align test launches with typical user activity and keep tests running for at least 14 days, so that results reflect full weekly cycles and have time to reach statistical significance. Understanding these metrics and their implications lets brands make informed decisions that drive growth. Parah Group emphasizes that A/B experimentation is an ongoing optimization process that uses rigorous evaluation and data-informed strategies to maximize profitability, align with consumer psychology, and ultimately make marketing more effective.
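Unbalanced traffic, one of the pitfalls noted earlier, can be detected with a sample ratio mismatch (SRM) check. This is a chi-square goodness-of-fit test that compares observed group sizes with the intended split. The Python sketch below assumes a two-group test. The function name and the strict 0.001 alert threshold are illustrative choices, not settings from the source.

```python
import math
from statistics import NormalDist


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed A/B split."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((n_a - expected_a) ** 2 / expected_a
            + (n_b - expected_b) ** 2 / expected_b)
    # Two groups give 1 degree of freedom; a chi-square variable with 1 df is
    # the square of a standard normal, so p = 2 * (1 - Phi(sqrt(chi2))).
    return 2 * (1 - NormalDist().cdf(math.sqrt(chi2)))


SRM_ALERT = 0.001  # illustrative threshold: a tiny p-value suggests broken assignment

for a, b in [(10_000, 10_050), (10_000, 10_600)]:
    p = srm_p_value(a, b)
    status = "investigate before analyzing" if p < SRM_ALERT else "ok"
    print(a, b, f"p = {p:.2g}", status)
```

A 10,000 vs. 10,050 split is within normal random variation, while 10,000 vs. 10,600 is very unlikely under a true 50/50 assignment. A mismatch like that usually points to a tracking or redirect problem, so the test results should not be trusted until it is explained.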

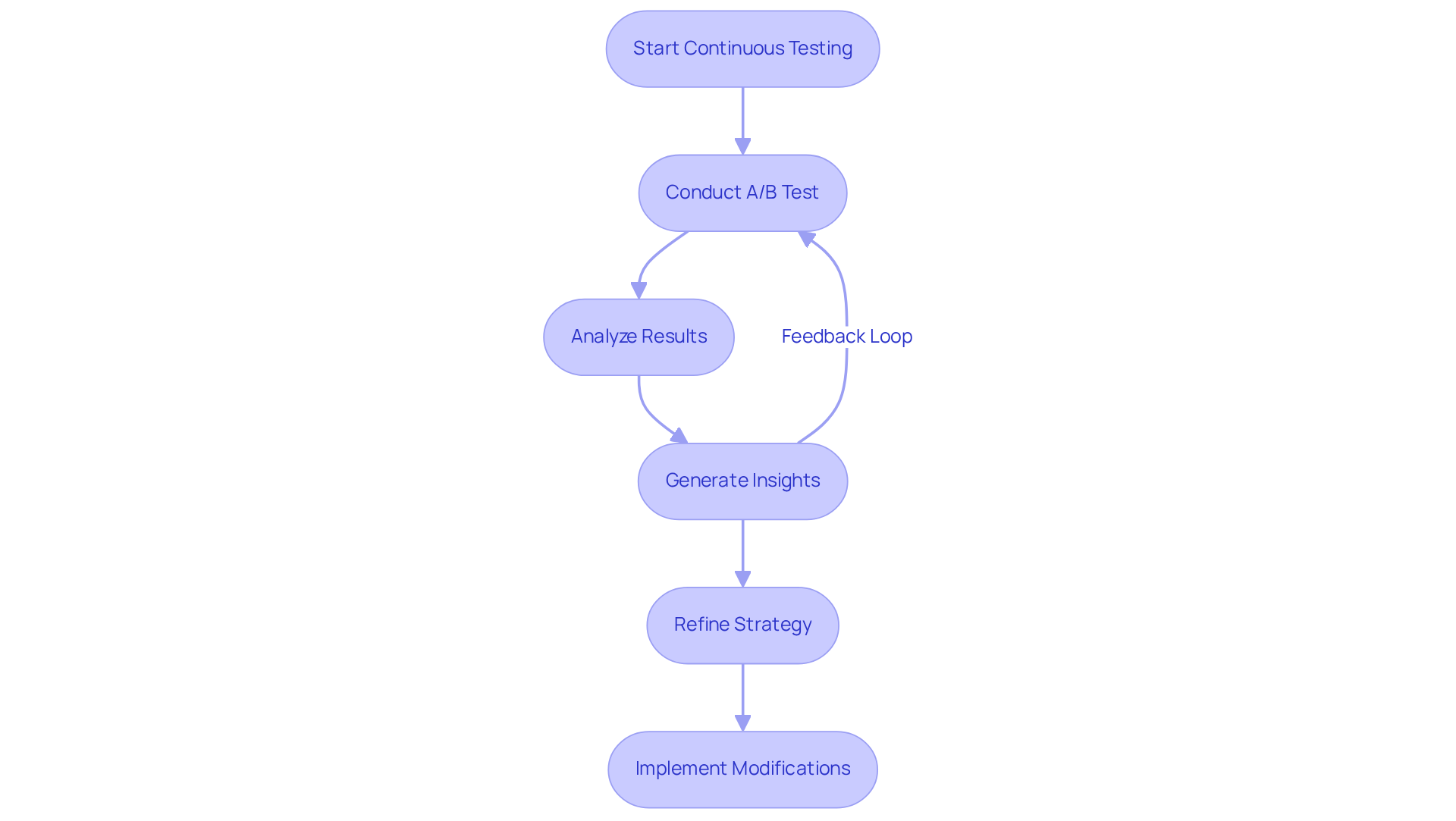

Implement Continuous Testing and Iteration

A/B experimentation must be viewed as an ongoing process rather than a one-off event. By embracing a continuous testing framework, companies can rapidly adapt to evolving market dynamics and shifting consumer preferences. Each A/B test generates insights that should guide the next one.

For instance, a $30M clothing company that partnered with Parah Group witnessed a remarkable 35% increase in conversion rates after refining their homepage by:

- Highlighting social proof and testimonials

- Reducing unnecessary pop-ups

- Gamifying the progress bar for free shipping thresholds

When a specific modification results in enhanced conversion rates, it is prudent to explore further variations of that element to maximize performance. This not only amplifies the effectiveness of marketing strategies but also fosters a culture of innovation within the organization.

Continuous evaluation and refinement help DTC companies maintain a competitive edge and realize their growth potential. High-growth DTC brands have reported substantial improvements, with A/B experimentation driving a 20-40% increase in click-through rates and a 15-30% rise in return on ad spend, underscoring the critical role of iterative testing in long-term success.

Essential metrics, including conversion rate (CVR) and customer acquisition cost (CAC), should be diligently tracked to assess the comprehensive impact of these evaluation efforts. Moreover, establishing a structured learning repository is vital for documenting insights and ensuring that past learnings inform future tests, ultimately enhancing the overall effectiveness of the testing process.
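The sketch below shows one way to compute CVR and CAC per variant and to store each concluded test in a simple learning repository. The field names, the in-memory list standing in for a real repository, the tag-based lookup, and the example numbers are hypothetical. The numbers are invented and are not the Parah Group case-study figures. CAC is computed here as acquisition spend divided by new customers, a common definition that may need refining for your own attribution model.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class VariantResult:
    visitors: int
    orders: int
    new_customers: int
    acquisition_spend: float  # marketing spend attributed to this variant

    @property
    def cvr(self) -> float:
        """Conversion rate: orders per visitor."""
        return self.orders / self.visitors

    @property
    def cac(self) -> float:
        """Customer acquisition cost: spend per new customer."""
        return self.acquisition_spend / self.new_customers


@dataclass
class Learning:
    test_name: str
    hypothesis: str
    control: VariantResult
    variant: VariantResult
    decision: str         # e.g. "ship variant", "keep control", "retest"
    concluded_on: date
    tags: set = field(default_factory=set)


repository: list = []


def past_learnings(tag: str) -> list:
    """Return earlier tests on the same element so new tests can build on them."""
    return [entry for entry in repository if tag in entry.tags]


repository.append(Learning(
    test_name="Homepage testimonials (hypothetical)",
    hypothesis="Adding testimonials above the fold raises CVR",
    control=VariantResult(visitors=20_000, orders=600, new_customers=450, acquisition_spend=22_500.0),
    variant=VariantResult(visitors=20_000, orders=700, new_customers=520, acquisition_spend=22_500.0),
    decision="ship variant",
    concluded_on=date(2025, 3, 14),
    tags={"homepage", "social-proof"},
))

for entry in past_learnings("homepage"):
    print(entry.test_name,
          f"CVR {entry.control.cvr:.1%} -> {entry.variant.cvr:.1%}",
          f"CAC {entry.control.cac:.2f} -> {entry.variant.cac:.2f}")
```

Tagging entries by page element makes it easy to check what has already been tried on the homepage or checkout before designing the next test.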

Conclusion

A/B experimentation is an essential strategy for direct-to-consumer (DTC) brands aiming to elevate their growth and marketing effectiveness. By systematically comparing various versions of web pages or app interfaces, brands can make data-informed decisions that lead to enhanced user engagement and conversion rates. This data-driven approach allows companies to move beyond intuition-based decisions, ensuring that every modification is substantiated by solid evidence.

Effective A/B testing rests on a few key elements:

- The necessity of defining clear objectives and hypotheses

- Selecting impactful variables for testing

- Analyzing results to inform future strategies

Insights drawn from real-world case studies, such as those from Parah Group, underscore the tangible benefits of continuous A/B experimentation—demonstrating significant increases in conversion rates and overall revenue. Moreover, the significance of statistical rigor and an iterative testing process cannot be overstated, as these practices ensure that brands remain agile and responsive to market dynamics.

Embracing A/B testing transcends mere tactical adoption; it signifies a commitment to ongoing optimization and growth. DTC brands are urged to weave these best practices into their marketing strategies, cultivating a culture of experimentation that not only drives immediate results but also positions them for sustained success in a competitive landscape. By harnessing the power of A/B experimentation, brands can unlock their full potential, achieving remarkable outcomes in their customer engagement efforts.

Frequently Asked Questions

What is A/B testing?

A/B testing, also known as split testing, is a method for comparing two versions of a webpage or app to determine which one performs better. Users are randomly assigned to either the original version (Group A) or a modified version (Group B), and user engagement and conversion metrics are compared between the groups.

Why is A/B testing important for companies?

A/B testing is crucial for companies as it allows them to make data-driven decisions to improve conversion rates and customer engagement. By testing different elements, such as product visuals and call-to-action placements, companies can uncover actionable insights that enhance customer experiences and increase sales.

What are some projected trends for A/B testing in the future?

It is projected that by 2025, approximately 70% of direct-to-consumer (DTC) companies will incorporate A/B testing as a fundamental strategy to enhance their online presence and customer engagement.

How can A/B testing impact conversion rates?

A/B testing can significantly improve conversion rates. For example, a DTC company that tested different product images saw a 20% increase in click-through rates for the variant featuring lifestyle images compared to standard product shots.

What are the key components of an effective A/B test?

Effective A/B tests require clear objectives that are specific, measurable, achievable, relevant, and time-bound (SMART), as well as a testable hypothesis predicting how changes will impact user behavior.

What is the significance of statistical analysis in A/B testing?

Statistical analysis is essential in A/B testing to ensure the reliability of results. A common threshold for statistical significance is a 95% confidence level (p-value < 0.05), which helps confirm that the observed effects are not due to random chance.

How long should A/B tests be conducted?

A/B tests should typically be conducted over a period of 7 to 14 days to gather sufficient data for accurate analysis.

What common pitfalls should be avoided in A/B testing?

Common pitfalls include testing multiple variables at once and failing to ensure balanced traffic distribution between the two groups, which can lead to skewed results.

How can brands maximize the effectiveness of A/B testing?

Brands can maximize the effectiveness of A/B testing by defining clear objectives and hypotheses, leveraging data-driven insights, and ensuring that all marketing efforts, such as paid ads and landing pages, are aligned.

What outcomes have brands achieved through A/B testing?

Brands that engage in continuous A/B testing have reported significant outcomes, such as a 35% rise in conversion rates and a 10% increase in revenue per visitor, as demonstrated by case studies from Parah Group.
