Overview

A/B testing, commonly referred to as split testing, lets DTC brand owners compare two versions of a webpage or app to determine which one converts better. This technique allows brands to make data-driven decisions instead of relying on assumptions. By applying what each test reveals, brands can enhance user experience and drive sales through targeted improvements.

Introduction

A/B testing has emerged as a cornerstone strategy for direct-to-consumer (DTC) brands aiming to optimize their online presence and enhance conversion rates. By comparing two variations of a webpage or app, brands can leverage data-driven insights to make informed decisions that enhance user experience and drive sales. However, many brand owners encounter challenges in implementing effective A/B testing practices, often resulting in missed opportunities for growth.

What are the key elements that can transform A/B testing from a mere experiment into a powerful tool for sustainable success?

Define A/B Testing: Core Concepts and Importance

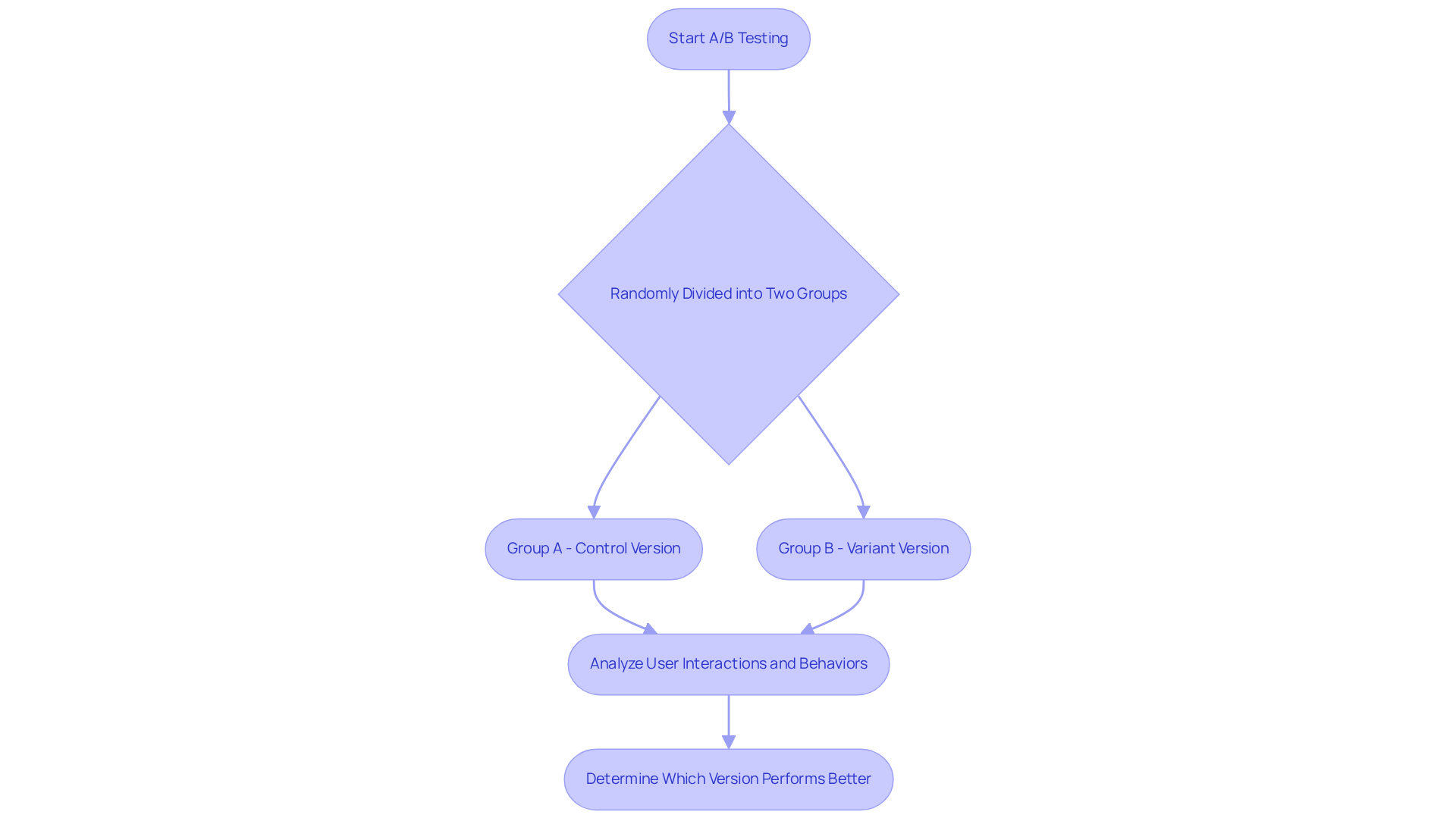

A/B testing, also known as split testing, is a technique used to compare two versions of a webpage or app to determine which one performs better in terms of conversion effectiveness. The fundamental concept involves randomly dividing your audience into two groups: one group experiences version A (the control), while the other engages with version B (the variant). By analyzing user interactions and behaviors, brands can uncover which version yields superior engagement and conversion rates.

The significance of A/B testing lies in its capacity to provide empirical evidence for informed decision-making. Rather than relying on assumptions or intuition, DTC brands can harness data to implement strategic improvements that enhance user experience and drive sales. This method not only optimizes existing resources but also mitigates the risk of introducing changes that could adversely affect performance. At Parah Group, we regard A/B testing as an essential element of our Conversion Rate Optimization (CRO) strategies, ensuring our clients achieve sustainable growth and increased profitability through data-driven insights.
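The random split into a control group and a variant group described in this section can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular testing platform: the function name `assign_variant`, the experiment label, and the visitor IDs are all hypothetical. It assumes each visitor has a stable identifier (for example, a cookie value) and hashes that identifier so the same visitor always sees the same version.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "headline-test") -> str:
    """Return "A" (control) or "B" (variant) for a visitor.

    Hashing the experiment name together with the visitor ID gives a stable,
    effectively random 50/50 split: repeat visits land in the same group.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # integer from 0 to 99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    # Hypothetical visitor IDs, used only to show that the split is roughly even.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}")] += 1
    print(counts)
```

Including the experiment name in the hash means a visitor who lands in group A for one test is not automatically in group A for the next one, which helps keep separate tests independent of each other.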

Implement A/B Testing: Step-by-Step Guide for DTC Brands

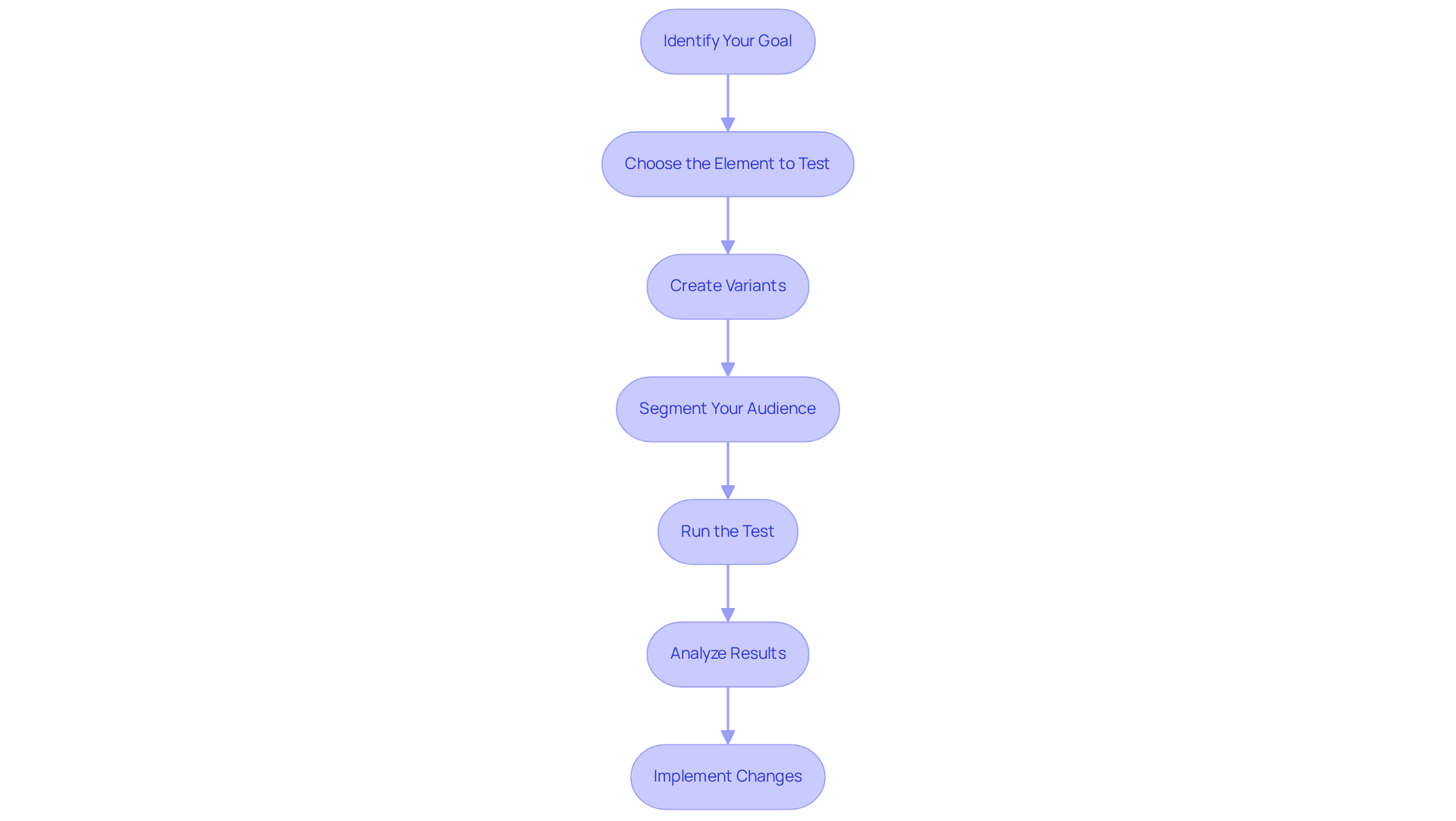

- Identify Your Goal: Clearly define the objective of your A/B test. This could involve increasing conversion rates, improving click-through rates, or boosting user engagement. Establishing a specific goal will guide your testing process and help measure success, reflecting Parah Group's commitment to profitability and sustainable growth.

- Choose the Element to Test: Select a particular element on your webpage to focus on, such as headlines, call-to-action buttons, images, or layout. For instance, changing a headline can lead to significant improvements; one study found that a new tagline resulted in an 8.2% increase in click-throughs. Parah Group highlights that even minor adjustments can have a significant effect, such as incorporating a live chat feature, which can boost conversion rates by 40%.

- Create Variants: Develop two distinct versions of the chosen element. Ensure that the modifications are substantial enough to influence user behavior but not so drastic that they create confusion. For instance, a straightforward modification in button text from 'add to bag' to 'add to cart' led to an 81.4% rise in purchases for a particular e-commerce store, demonstrating the transformative potential of data-driven strategies.

- Segment Your Audience: Randomly divide your audience into two groups to ensure statistically valid results. This segmentation is essential for precisely evaluating the influence of the modifications, reflecting Parah Group's approach, which optimizes existing resources and aligns with client requirements.

- Run the Test: Launch the A/B test and allow it to run for a sufficient duration to collect meaningful data. The duration of the test should depend on your traffic levels and the size of the change being tested, ensuring that your strategies are data-driven and aligned with sustainable growth.

- Analyze Results: After the test concludes, analyze the data to identify which version performed better. Focus on key metrics such as conversion rates, bounce rates, and user engagement. For instance, dedicated landing pages tailored to specific audiences can significantly improve ad campaign performance, demonstrating the effectiveness of targeted changes that Parah Group advocates.

- Implement Changes: Based on the analysis, implement the winning variant and continue to monitor its performance over time. A/B testing should be a continuous process that refines and enhances your marketing strategies. As Elizabeth Levitan, a Digital Optimization Specialist, observed, the opportunities for experimentation are endless. Insights obtained from A/B tests can lead to innovative solutions and enhancements in customer experience, reinforcing the significance of a strong CRO program. Notably, around 75% of the top 500 online retailers employ A/B testing platforms, highlighting its importance in the sector.
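How long is "a sufficient duration" for the Run the Test step? One rough way to estimate it is to work out how many visitors each version needs before a given improvement becomes detectable, then divide by daily traffic. The sketch below uses a standard normal-approximation formula for comparing two conversion rates. The function names, the 3% baseline rate, the 10% relative lift, and the 2,000 daily visitors are illustrative assumptions, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH group to detect the given lift.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_lift: smallest improvement worth detecting, e.g. 0.10 for +10%
    alpha:         accepted false-positive rate (two-sided test)
    power:         chance of detecting the lift if it really exists
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_variant, daily_visitors):
    """Days needed when daily traffic is split evenly across two versions."""
    return math.ceil(2 * n_per_variant / daily_visitors)


if __name__ == "__main__":
    # Hypothetical store: 3% baseline conversion, hoping to detect a 10% lift.
    n = sample_size_per_variant(baseline_rate=0.03, relative_lift=0.10)
    print("Visitors per variant:", n)
    print("Days at 2,000 visitors/day:", days_to_run(n, daily_visitors=2000))
```

With these assumed inputs the estimate comes out on the order of 50,000 visitors per variant, which shows why low-traffic stores often need to test bolder changes: a larger expected lift shrinks the required sample considerably.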

Identify Key Elements to Test: Maximizing Impact on Conversion Rates

When conducting A/B tests, it is imperative to focus on the pivotal factors that most significantly influence conversion rates. Consider these essential areas:

- Headlines: As the first element visitors encounter, a captivating headline is vital for capturing attention and encouraging further exploration.

- Call-to-Action (CTA) Buttons: Experiment with various wording, colors, and placements to identify what drives the highest click-through rates.

- Images and Visuals: Test different images or graphics to ascertain which ones resonate most effectively with your target audience.

- Page Layout: The organization of elements on your page can profoundly impact user navigation and engagement.

- Pricing Strategies: Experiment with diverse pricing displays, discounts, or promotional offers to determine what maximizes conversions.

- Trust Signals: Integrate elements such as testimonials, reviews, or security badges to enhance credibility and foster trust with potential customers.

Avoid Common Mistakes: Best Practices for Effective A/B Testing

To maximize the effectiveness of your A/B testing, it is essential to implement best practices that reflect Parah Group's comprehensive approach to CRO, honed over a decade of expertise in the field.

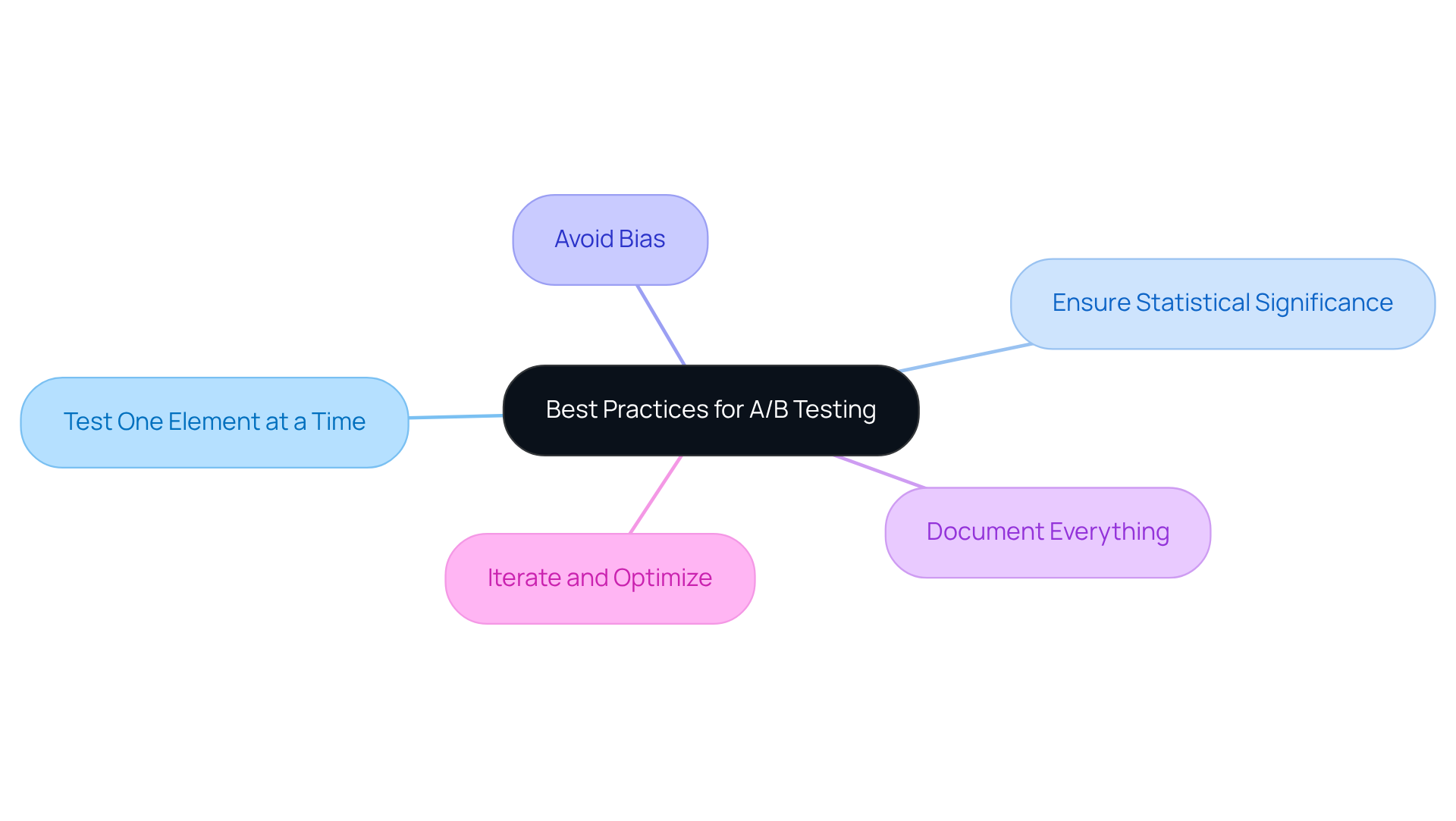

- Test One Element at a Time: Testing multiple changes simultaneously can obscure the understanding of which alteration influenced the observed results. Concentrate on isolating variables to accurately assess their individual impact on conversion rates.

- Ensure Statistical Significance: Conduct tests over a sufficient duration to collect enough data for reliable conclusions. Employ statistical analysis to validate that results are not merely coincidental, ensuring your findings are both robust and actionable.

- Avoid Bias: It is crucial to randomly assign audience segments to prevent skewed results. This practice accurately reflects consumer behavior and preferences, which is vital for effective CRO.

- Document Everything: Maintain thorough records of your tests, encompassing hypotheses, variations, and results. This documentation will inform future testing strategies and improve your approach based on past insights.

- Iterate and Optimize: Leverage insights gained from A/B tests to continuously refine your strategy. Testing should be an ongoing endeavor, not a one-off task. Acting as growth consultants, Parah Group underscores the significance of high-velocity testing to optimize existing resources and drive substantial growth. Remember, the long-term advantages of CRO can lead to sustained profitability, establishing it as a crucial strategy for DTC brands.
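The "Ensure Statistical Significance" practice above can be made concrete with a two-proportion z-test, one common way to check whether a difference in conversion rates is likely to be more than chance. This is a minimal sketch rather than the method of any specific tool, and the visitor and conversion counts are made-up numbers for illustration. It assumes visitors were randomly assigned and that each group is large enough for the normal approximation to hold.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (A) and variant (B).

    conv_a, conv_b: number of conversions in each group
    n_a, n_b:       number of visitors in each group
    Returns both rates, the relative lift of B over A, the z statistic,
    and a two-sided p-value.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("No variation in the data; cannot run the test.")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": (rate_b - rate_a) / rate_a,
        "z": z,
        "p_value": p_value,
    }


if __name__ == "__main__":
    # Hypothetical results: 300 of 10,000 convert on A, 360 of 10,000 on B.
    result = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
    for name, value in result.items():
        print(f"{name}: {value:.4f}")
    print("Significant at 5% level:", result["p_value"] < 0.05)
```

A p-value below the chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be coincidental. Deciding the threshold and sample size before the test starts, and not stopping early when the numbers look good, helps keep that conclusion trustworthy.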

Conclusion

A/B testing is an indispensable strategy for DTC brands, empowering them to make data-driven decisions that elevate user engagement and conversion rates. By comparing two versions of a webpage or app, brands can pinpoint which elements resonate most effectively with their audience, ultimately driving enhanced performance and profitability. This empirical approach not only cultivates a deeper understanding of customer preferences but also mitigates the risks associated with altering marketing strategies.

Throughout this article, we have underscored key insights into the A/B testing process, emphasizing the necessity of establishing clear goals, selecting specific elements to test, and ensuring statistical significance in results. Adhering to best practices—such as testing one variable at a time and maintaining meticulous documentation—is vital for maximizing the effectiveness of A/B testing. Moreover, grasping pivotal factors like headlines, call-to-action buttons, and trust signals can profoundly impact conversion rates, thereby reinforcing the importance of a structured testing approach.

In conclusion, the importance of A/B testing in the domain of direct-to-consumer marketing cannot be overstated. Brands are urged to adopt this methodology as an ongoing process, leveraging the insights gained to refine their strategies and promote sustainable growth. By prioritizing data-driven decisions and implementing best practices, DTC brands can not only enhance their marketing effectiveness but also secure a competitive advantage in an increasingly dynamic marketplace.

Frequently Asked Questions

What is A/B testing?

A/B testing, also known as split testing, is a technique used to compare two versions of a webpage or app to determine which one performs better in terms of conversion effectiveness.

How does A/B testing work?

A/B testing works by randomly dividing an audience into two groups: one group experiences version A (the control), while the other engages with version B (the variant). User interactions and behaviors are then analyzed to identify which version yields better engagement and conversion rates.

Why is A/B testing important?

A/B testing is important because it provides empirical evidence for informed decision-making. It allows brands to rely on data rather than assumptions, enabling them to make strategic improvements that enhance user experience and drive sales.

How does A/B testing benefit brands?

A/B testing benefits brands by optimizing existing resources and reducing the risk of implementing changes that could negatively impact performance. It helps in achieving sustainable growth and increased profitability through data-driven insights.

What role does A/B testing play in Conversion Rate Optimization (CRO)?

A/B testing is regarded as an essential element of Conversion Rate Optimization (CRO) strategies, helping brands to improve their conversion rates and overall effectiveness in reaching their business goals.
