Overview

This article presents crucial A/B testing statistics that every direct-to-consumer (DTC) brand owner must grasp to elevate their marketing strategies and boost conversion rates.

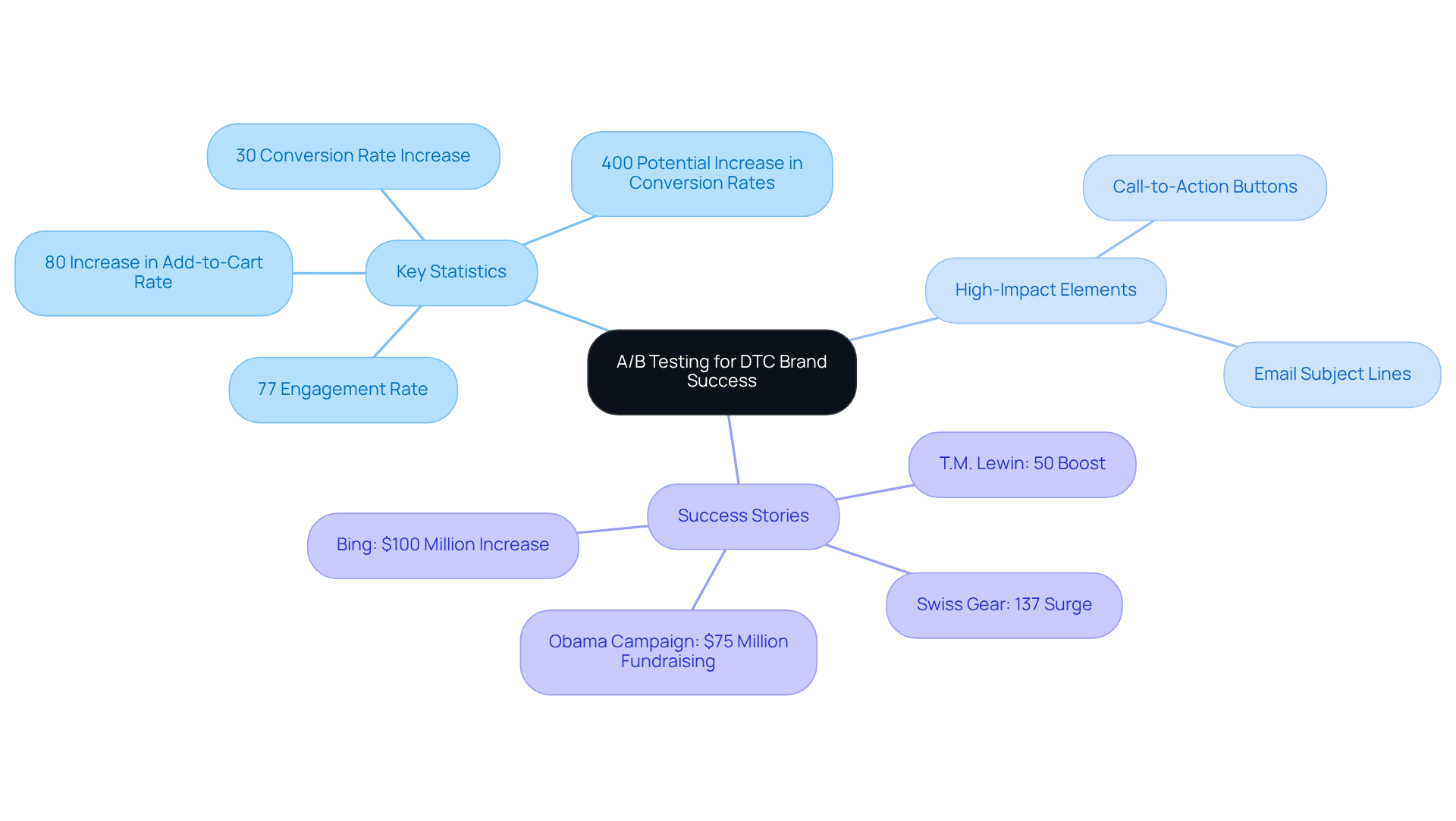

- Companies that implement A/B testing can realize conversion increases of up to 30%.

- The article also covers statistical significance, sample sizes, and the metrics that inform decision-making and enhance profitability through optimized user experiences.

Introduction

A/B testing stands as a cornerstone strategy for direct-to-consumer (DTC) brands, with compelling statistics showcasing its potential to elevate conversion rates by as much as 30%.

With 77% of organizations now embracing this powerful tool, it is imperative for brands to grasp the nuances of A/B testing to refine their marketing tactics and enhance user experiences.

Yet, amid the promise of increased engagement and sales, numerous DTC companies face the challenge of effectively implementing A/B testing methodologies.

What essential statistics and strategies can they leverage to ensure their testing efforts translate into measurable success?

Parah Group: Key A/B Testing Statistics for DTC Brand Success

A/B experimentation stands as a pivotal strategy for DTC brands, with research indicating that companies employing this method can achieve conversion rate enhancements of up to 30%. Currently, 77% of organizations are actively engaging in A/B tests, highlighting its essential role within the industry. Brands that consistently engage in A/B trials not only refine their marketing strategies but also markedly enhance user experiences.

Effective A/B testing strategies prioritize high-impact elements such as:

- Call-to-action buttons

- Email subject lines

Focusing on these elements results in significant increases in engagement and sales. For example, straightforward subject lines have been shown to elicit 541% more responses than their more creative counterparts, underscoring the importance of clarity in communication. Moreover, companies like T.M. Lewin have reported a 50% boost in conversion rates through clear returns messaging, while Swiss Gear experienced a 137% surge in conversion rates during peak shopping seasons by optimizing their product detail pages. These instances illustrate that well-targeted A/B testing can drive substantial growth and profitability for DTC companies.

Statistical Significance: The Foundation of A/B Testing Insights

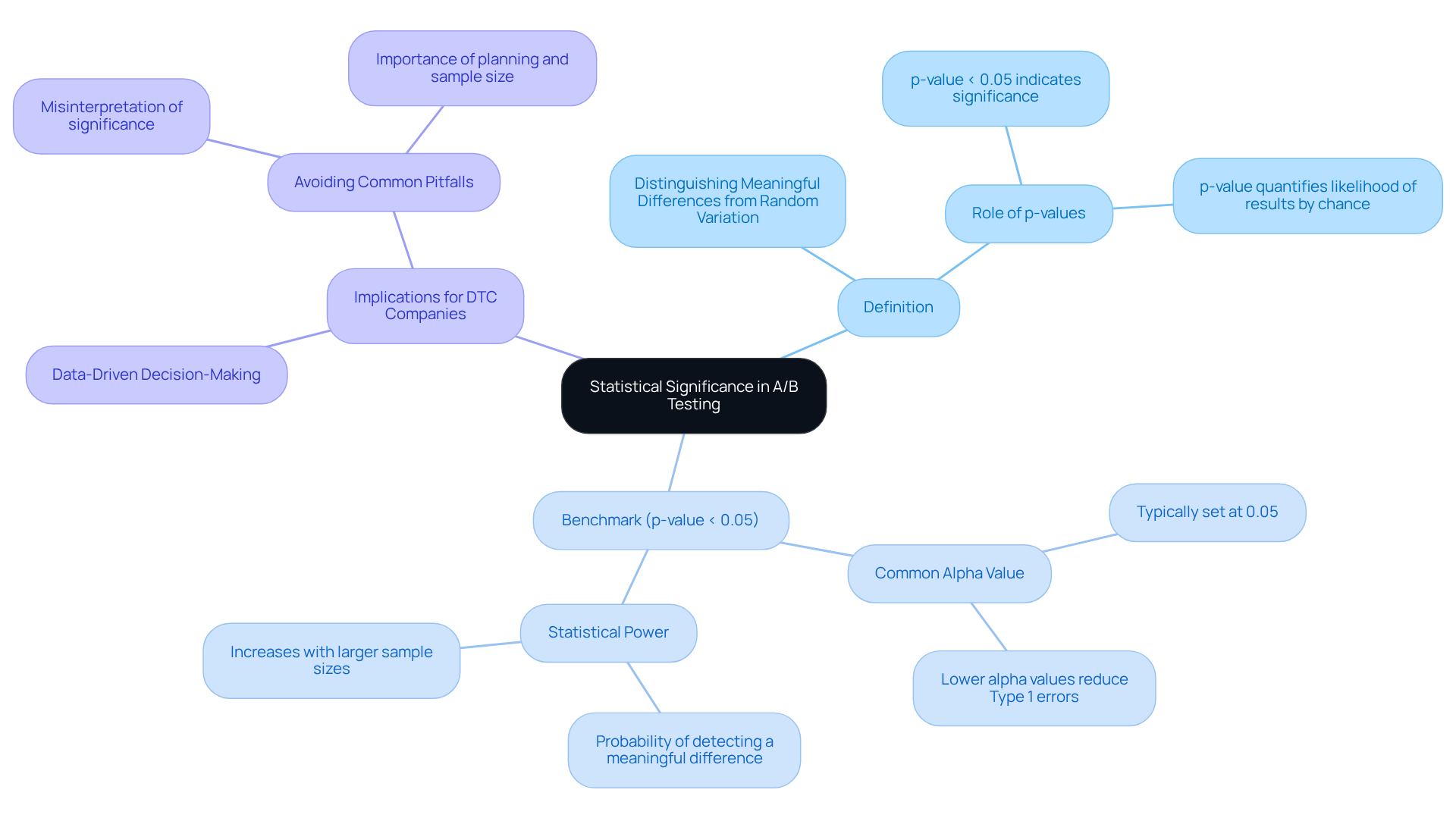

In A/B testing, statistical significance helps determine whether the outcomes of a test are attributable to the changes implemented or arise purely from random variation. A widely recognized benchmark for significance is a p-value of less than 0.05, meaning that if the change truly had no effect, a difference at least as large as the one observed would occur less than 5% of the time.

This fundamental concept is essential for DTC companies, as it empowers them to validate their test outcomes and make informed decisions. By understanding and applying this principle, companies can analyze their A/B test results with confidence, ultimately leading to more effective optimization strategies.
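As a rough illustration of the significance check described above, the sketch below runs a two-sided, pooled two-proportion z-test using only the Python standard library. The function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical, chosen for illustration only; this is one common way to compute a p-value, not necessarily the method used by any particular testing tool.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for a difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 10,000 visitors per variant, 3.0% vs 3.6% conversion.
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With these made-up counts the p-value comes out a little under 0.02, so the difference would clear the 0.05 benchmark.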

Confidence Intervals: Assessing the Reliability of A/B Test Results

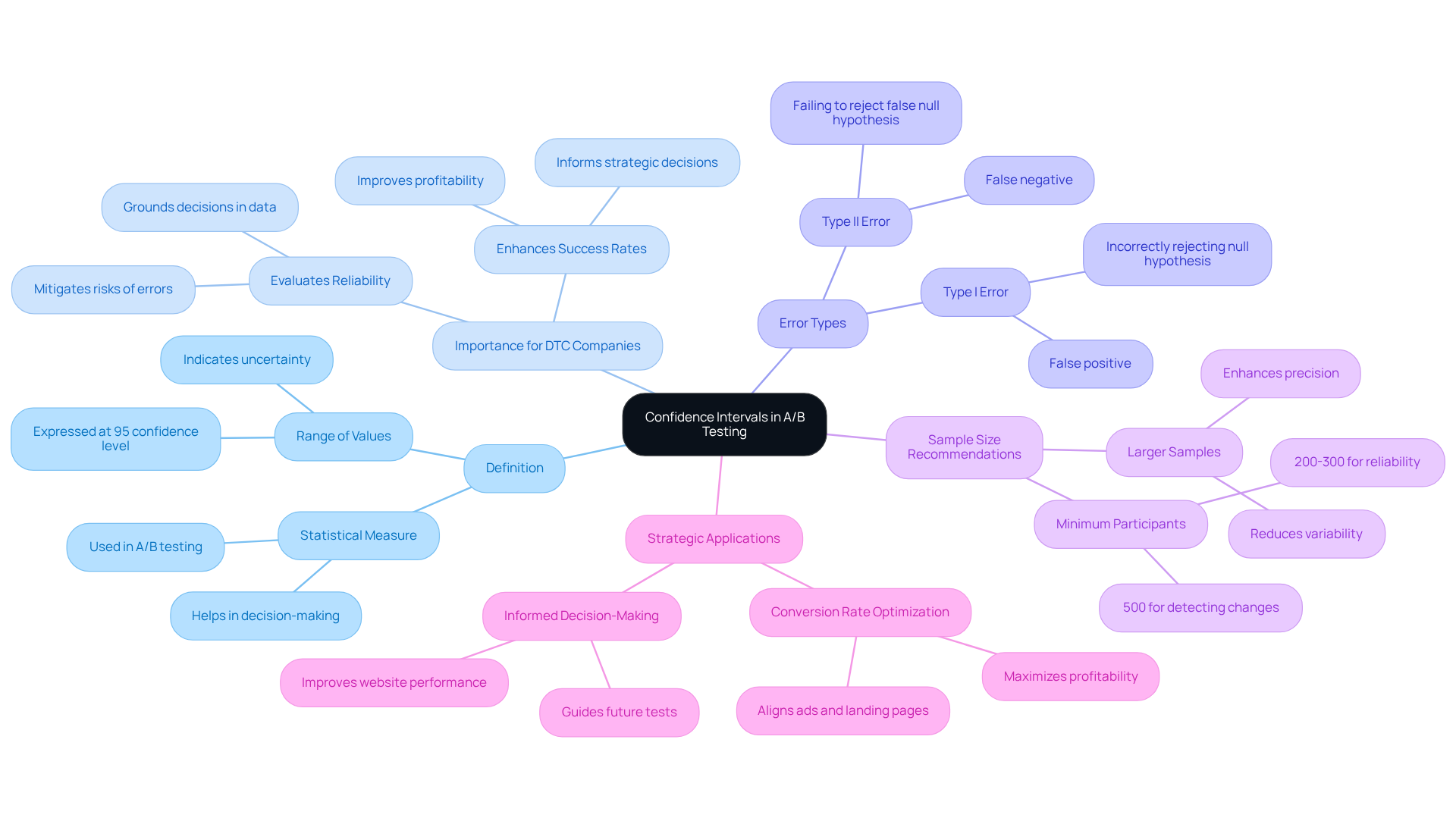

Confidence intervals are critical in defining the range within which the true effect of a change is expected to fall, typically expressed at a 95% confidence level. For example, if an A/B test shows a conversion rate increase with a confidence interval of 2% to 5%, this indicates that the actual increase is likely to reside within this range.

This statistical measure is indispensable for direct-to-consumer (DTC) companies, as it allows them to evaluate the reliability of their results, ensuring that decisions are grounded in solid data rather than mere chance. By understanding and applying confidence intervals, companies can more effectively assess the significance of their A/B test outcomes, leading to informed and strategic business decisions.

Furthermore, confidence intervals play a vital role in mitigating the risks associated with Type I errors (incorrectly rejecting a true null hypothesis) and Type II errors (failing to reject a false null hypothesis). This understanding is paramount for DTC companies striving to enhance their success rates and increase profitability.

To bolster the reliability of A/B tests, it is recommended that companies aim for a minimum of 200-300 transactions per variation, as larger sample sizes reduce variability and enhance the precision of confidence intervals. By leveraging these insights within a comprehensive conversion rate optimization (CRO) strategy that aligns paid ads and landing pages, DTC companies can maximize profitability and drive sustainable growth.
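To make the idea of a confidence interval concrete, here is a minimal sketch that computes a Wald (normal-approximation) interval for the absolute difference between two conversion rates. The function name `diff_confidence_interval` and the counts are hypothetical, and the Wald interval is only one of several reasonable choices; it is shown here because it is simple to read.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, level=0.95):
    """Wald interval for (rate_b - rate_a), expressed as an absolute difference."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error of the difference between two proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Critical value for the requested two-sided confidence level.
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)
    diff = p_b - p_a
    return diff - z_crit * se, diff + z_crit * se


# Hypothetical counts: 10,000 visitors per variant, 3.0% vs 3.6% conversion.
low, high = diff_confidence_interval(300, 10_000, 360, 10_000)
print(f"95% CI for the absolute lift: {low:.4f} to {high:.4f}")
```

For these illustrative numbers the interval runs from roughly 0.1 to 1.1 percentage points. It excludes zero, but it is wide, which is the kind of imprecision that a larger sample would narrow.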

Average Conversion Rates: Industry Benchmarks for DTC Brands

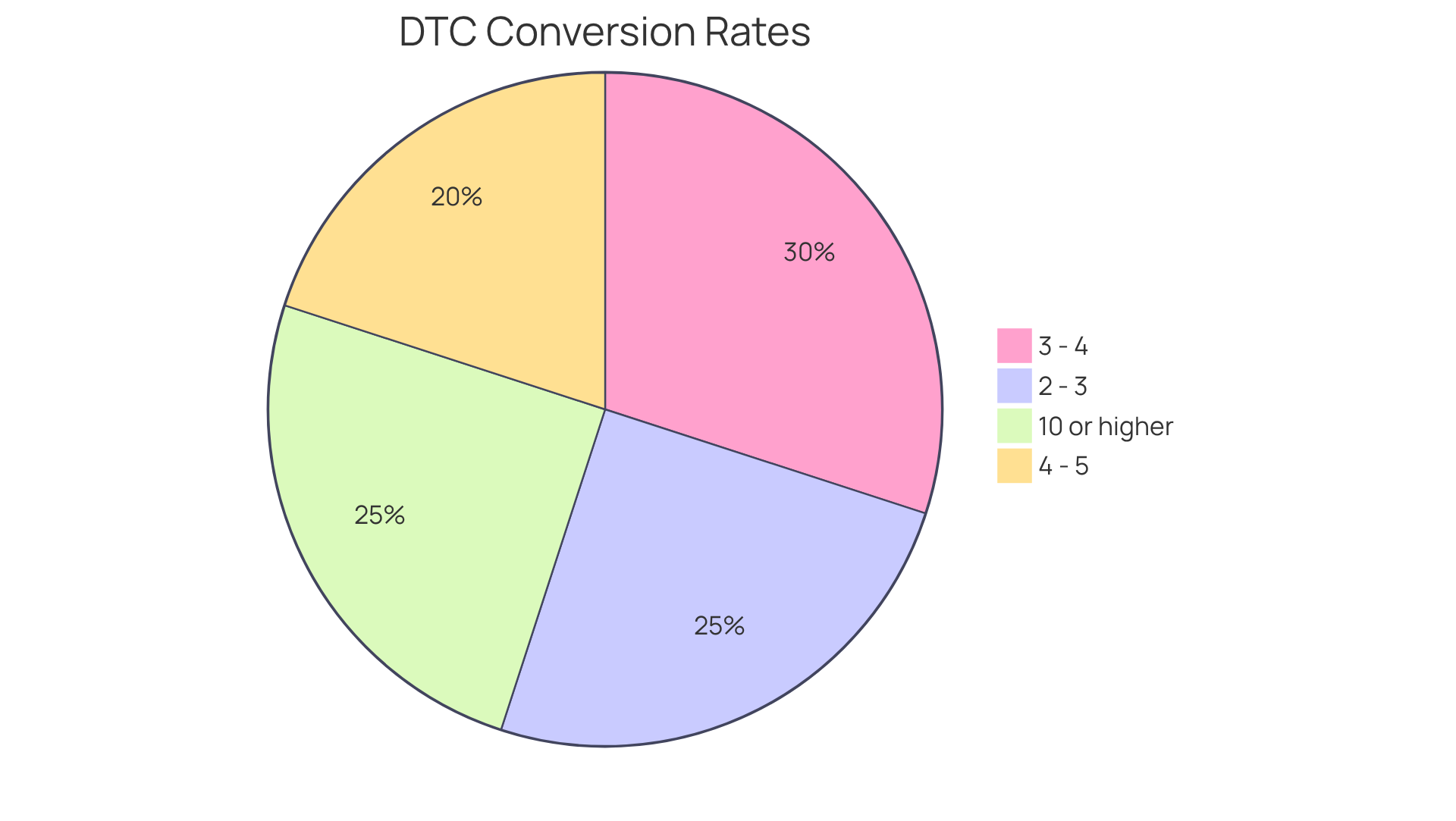

The typical conversion rate for direct-to-consumer (DTC) companies generally lies between 2% and 5%, influenced by factors such as sector and product category. For instance, online retail companies typically see rates of around 3.5%. Understanding these benchmarks is crucial for DTC companies, as it allows them to pinpoint performance gaps and effectively prioritize optimization efforts. By leveraging insights from market norms, companies can implement targeted strategies to improve their conversion rates, ultimately driving increased profitability and growth. Notably, a conversion rate of 10% or higher is recognized as a strong standard across industries.

For example:

- A $30M clothing label that partnered with Parah Group saw a remarkable 35% increase in conversion rates after optimizing product pricing and gamifying the shopping experience.

- A $15M cleaning product brand boosted its average order value (AOV) by 80% through strategic A/B testing of free shipping thresholds and product bundling.

These case studies underscore the potential for DTC companies to achieve significant improvements in their conversion rates through innovative conversion rate optimization (CRO) strategies. Continuous enhancement efforts are essential, as brands should strive to lift their conversion rates beyond initial benchmarks.

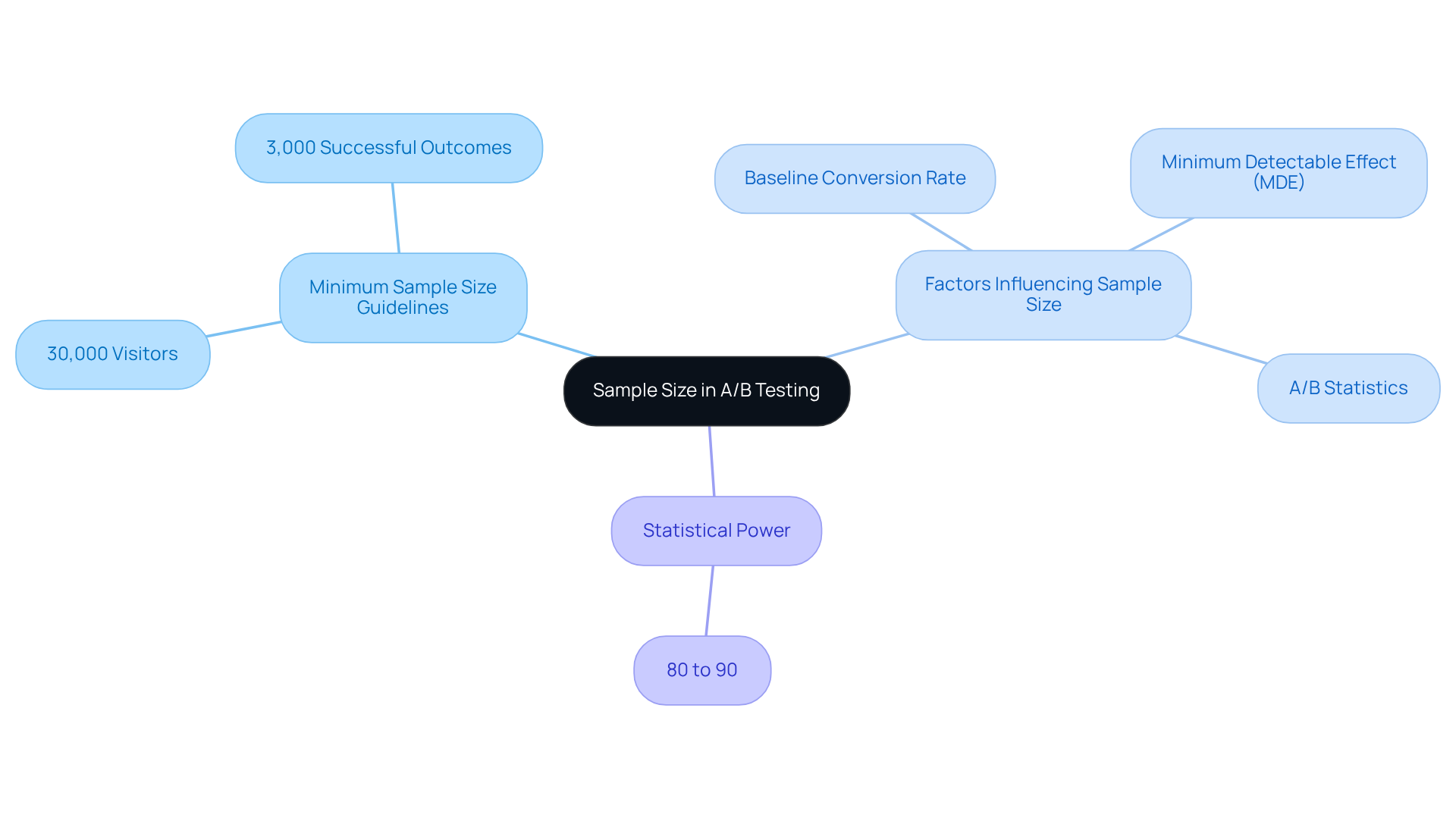

Sample Size: Ensuring Validity in A/B Testing Results

To achieve statistically valid results, A/B tests must involve a sufficiently large sample size. A general guideline is to aim for a minimum of 30,000 visitors and 3,000 conversions per variant. This approach ensures that results are not skewed by random variation, allowing findings to be confidently applied to a broader audience.

Furthermore, determining the appropriate sample size necessitates consideration of factors such as:

- The baseline conversion rate

- The minimum detectable effect (MDE)

- The desired statistical power, typically set between 80% and 90%
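The factors listed above can be combined into a sample size estimate. The sketch below uses a standard normal-approximation formula for comparing two proportions; the function name `visitors_per_variant`, the choice to express the MDE as a relative lift, and the example inputs are all assumptions made for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # rate we want to be able to detect
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_power = NormalDist().inv_cdf(power)          # desired statistical power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 3% baseline conversion rate, 20% relative MDE.
n = visitors_per_variant(baseline=0.03, relative_mde=0.20)
print(f"Visitors needed per variant: {n}")
```

With these illustrative inputs the estimate lands on the order of 14,000 visitors per variant. Halving the MDE roughly quadruples the requirement, which is why small expected lifts demand far more traffic.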

At Parah Group, we assert that effective A/B experimentation is a crucial component of a comprehensive Conversion Rate Optimization strategy. By leveraging consumer psychology and rigorous evaluation methods, we empower DTC companies to maximize profitability and foster substantial growth through informed decision-making.

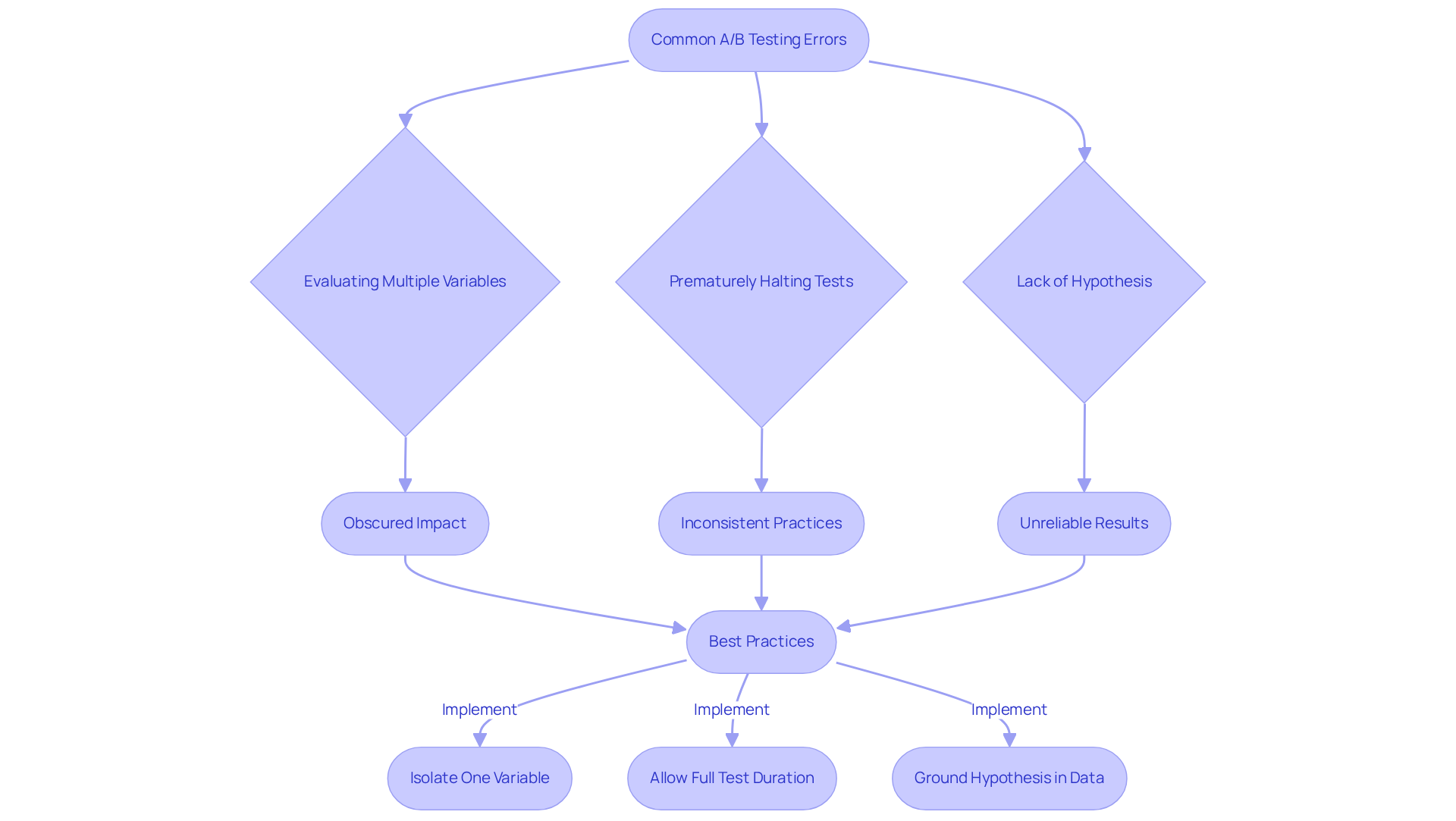

Common A/B Testing Errors: What DTC Brands Should Avoid

DTC companies often make common A/B testing mistakes that can undermine their optimization efforts. A significant error is testing multiple variables simultaneously, which obscures the true impact of each change. Instead, companies should isolate one variable per test to clearly identify its effect on user behavior and conversion rates. This method has been crucial for the firms Parah Group has partnered with, where strategic testing has led to substantial improvements, including:

- A 35% increase in conversion rates

- A 10% rise in revenue per visitor

Furthermore, a clearly articulated hypothesis is vital; evaluations should be grounded in data and insights rather than assumptions to ensure that the evaluation process is strategic and relevant to the customer journey.

Another critical misstep is halting tests prematurely, before they reach statistical significance. A/B tests require a sample size of at least 25,000 visitors to produce reliable results, and many tests fail due to insufficient data. Parah Group underscores the importance of allowing tests to run their full course, which not only enhances the quality of insights but also helps avoid misleading conclusions. By following these practices, DTC companies can significantly improve their A/B testing and attain better results.
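One way to see why stopping early is risky is to simulate A/A tests, where both variants share the same true conversion rate, so every "significant" result is a false positive. The sketch below compares checking once at the planned end with checking at several interim looks and stopping at the first significant one. All names and parameters (`false_positive_rates`, the 5% rate, 10 looks, 300 simulated tests) are hypothetical choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, conv_b, n, alpha=0.05):
    """Two-sided pooled z-test with n visitors in each arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return False  # no variation in the data yet, nothing to test
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def false_positive_rates(rate=0.05, n=2000, looks=10, runs=300, seed=0):
    """Return (rate with peeking, rate with a single final look) for A/A tests."""
    rng = random.Random(seed)
    step = n // looks  # n should be a multiple of looks so the last look is at n
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        any_look_significant = False
        for visitors in range(1, n + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if visitors % step == 0 and is_significant(conv_a, conv_b, visitors):
                any_look_significant = True
        peeking_hits += any_look_significant
        fixed_hits += is_significant(conv_a, conv_b, n)
    return peeking_hits / runs, fixed_hits / runs


peeking, fixed = false_positive_rates()
print(f"False positives with peeking: {peeking:.1%}, single final look: {fixed:.1%}")
```

Because the final look is also one of the interim looks, the peeking rate can never be lower than the fixed-horizon rate in this simulation, and in practice it tends to be noticeably higher.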

Additionally, it is essential to recognize that 52.8% of CRO professionals lack a standardized stopping point for A/B trials, potentially leading to inconsistent practices. To ensure adequate traffic for dependable outcomes, companies should consider as a practical takeaway—a strategy that has proven effective in Parah Group's case studies, where optimized evaluations have resulted in notable revenue growth.

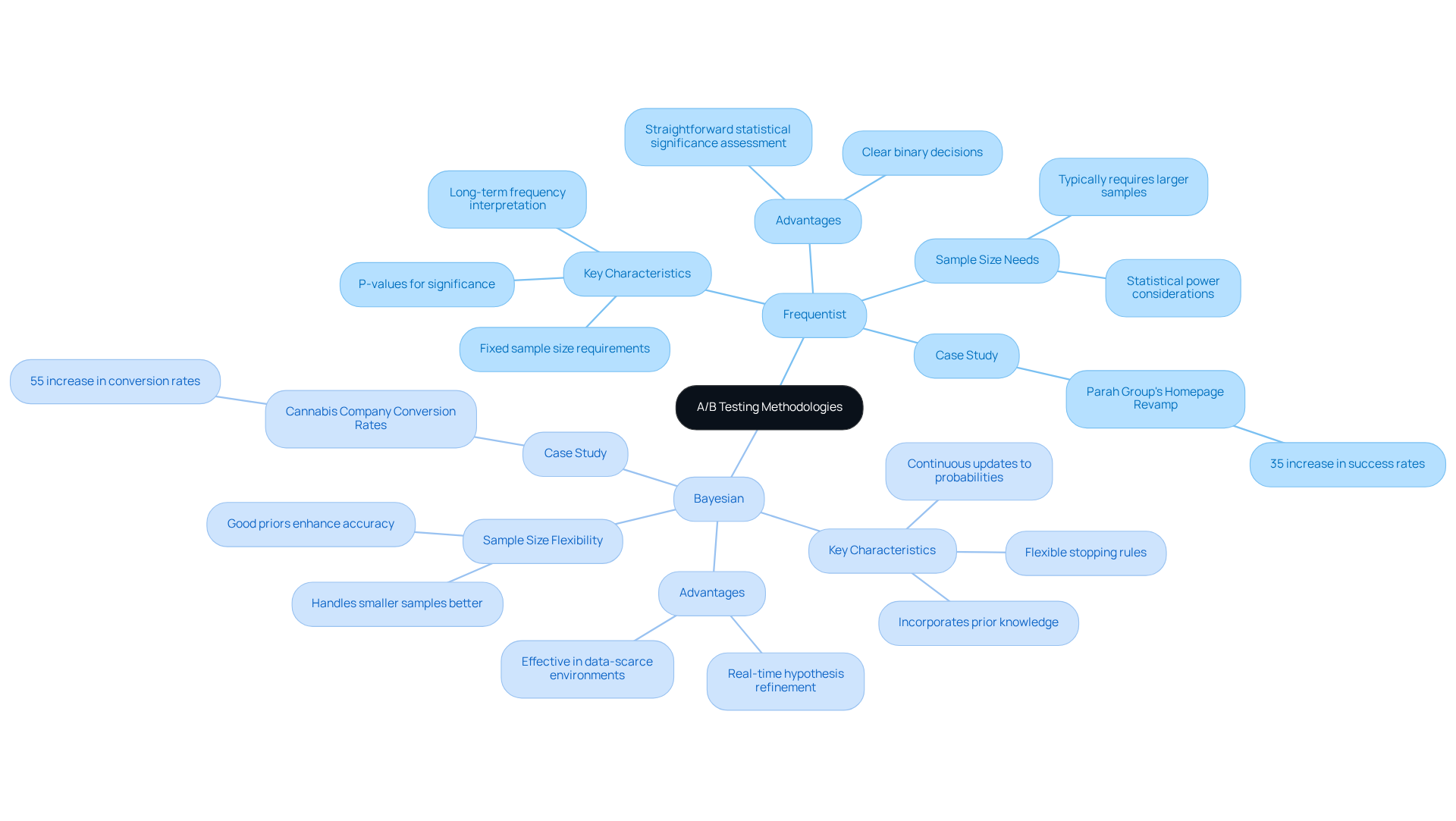

Frequentist vs. Bayesian: Choosing the Right A/B Testing Methodology

Frequentist and Bayesian methodologies present distinct frameworks for evaluating A/B tests, each offering unique advantages. The Frequentist approach is anchored in long-run frequencies and fixed probabilities, emphasizing statistical significance through p-values, which typically must fall below 0.05 to establish significance. This methodology generally demands larger sample sizes to yield reliable results, making it well suited to straightforward, repeatable experiments. For instance, a $30M clothing line that collaborated with Parah Group saw a 35% increase in conversion rates after implementing a revamped homepage based on A/B testing outcomes, showcasing the efficacy of the Frequentist method in driving substantial improvements.

In contrast, the Bayesian approach incorporates prior knowledge, enabling continuous updates to probabilities as new data is acquired. This adaptability allows DTC companies to refine their hypotheses based on real-time insights, proving particularly effective in data-scarce environments, where valuable insights can emerge even from smaller sample sizes. A case study involving a rapidly expanding cannabis company illustrated a 55% increase in conversion rates through the application of Bayesian methods, underscoring how this approach can lead to significant gains in profitability.

Moreover, Bayesian methodologies permit flexible stopping rules, facilitating timely decision-making grounded in accumulating evidence. When selecting an A/B testing methodology, DTC companies must assess their specific goals, data accessibility, and the necessity of integrating prior assumptions into their evaluation strategies. Success stories from Parah Group show how companies have leveraged both methodologies to enhance their marketing strategies, emphasizing the importance of choosing the appropriate method based on context and desired outcomes, while also considering potential biases in Bayesian analysis stemming from reliance on prior knowledge.
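For a sense of how a Bayesian evaluation differs in practice, the sketch below estimates the probability that variant B has a higher conversion rate than variant A. It assumes a uniform Beta(1, 1) prior for each variant and uses Monte Carlo sampling from the resulting Beta posteriors; the function name `prob_b_beats_a`, the prior, and the counts are illustrative assumptions rather than a description of any specific tool.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(rate_b > rate_a) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical counts: 10,000 visitors per variant, 3.0% vs 3.6% conversion.
probability = prob_b_beats_a(300, 10_000, 360, 10_000)
print(f"Estimated probability that B beats A: {probability:.3f}")
```

For these made-up counts the estimate comes out around 0.99. Swapping in an informative prior would shift the result, which is the source of the prior-related bias mentioned above.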

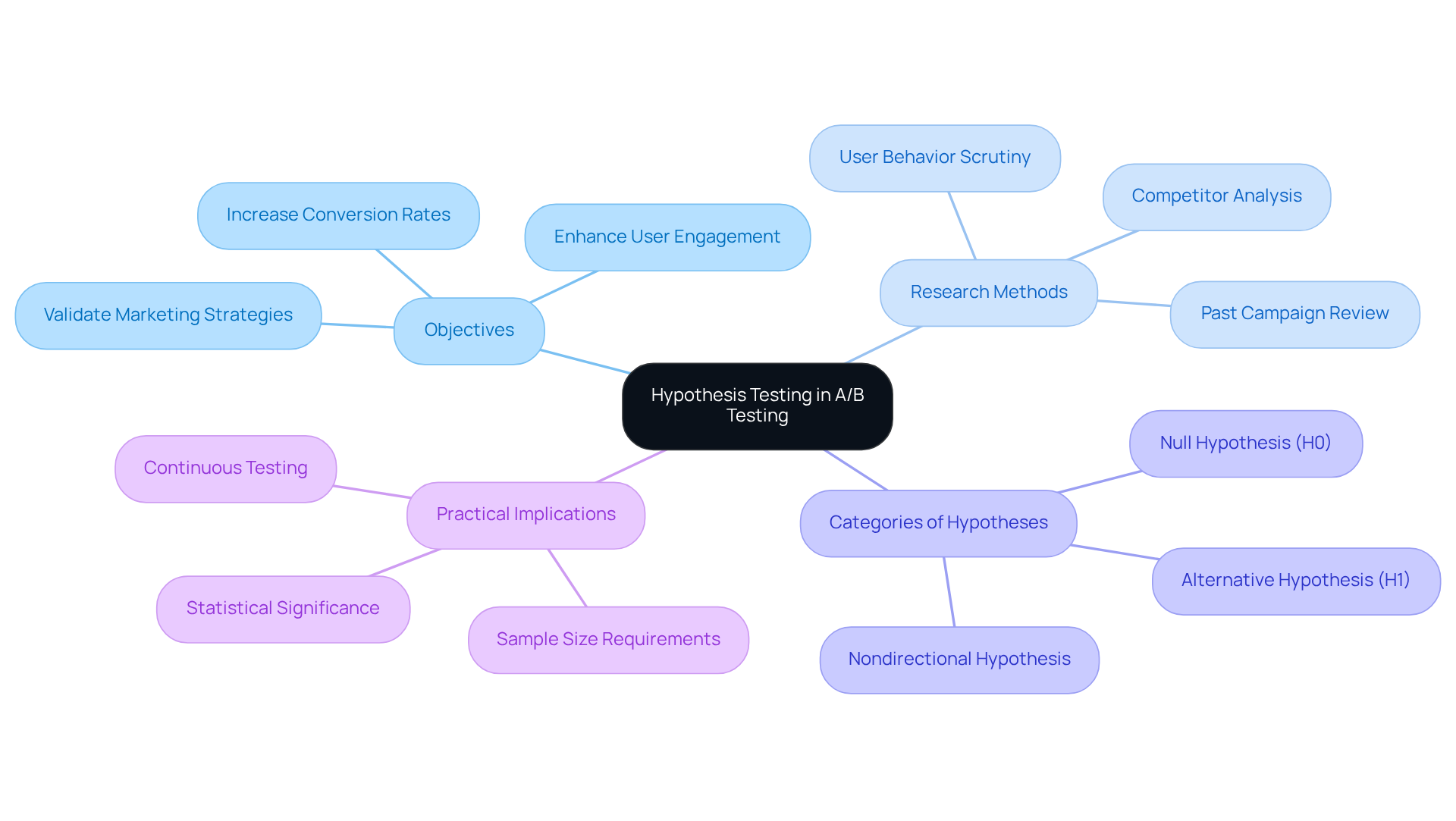

Hypothesis Testing: Defining Objectives in A/B Testing

Hypothesis testing serves as a cornerstone of A/B testing, providing a systematic framework for effective experimentation. For direct-to-consumer (DTC) companies, crafting precise hypotheses is essential; it directs the focus of each test and heightens the potential for actionable insights. Consider a hypothesis like 'Changing the color of the call-to-action button will increase click-through rates by 10%.' This sets a measurable objective that can be effectively assessed.

To develop effective hypotheses, brands must engage in preliminary research and data analysis. This process may involve:

- Scrutinizing user behavior

- Analyzing competitor strategies

- Reviewing past campaign performance

A well-articulated hypothesis not only clarifies the experiment's objective but also aids in interpreting the results. For instance, Techinsurance.com achieved a remarkable 73% increase in conversion rates after implementing a dedicated landing page based on targeted messaging, showcasing the effectiveness of focused hypotheses.

Moreover, understanding the two categories of hypotheses can strengthen the testing strategies of DTC companies: the Null Hypothesis (H0) posits no significant difference, and the Alternative Hypothesis (H1) indicates a significant difference. By ensuring that hypotheses are specific and measurable, companies can more effectively assess the impact of modifications made during A/B tests.

In practice, DTC companies should aim for a minimum of 1,000 transactions per variation to achieve reliable statistical significance. This benchmark facilitates a robust analysis of results, helping to ensure that observed changes are not merely coincidental. By continuously refining their hypotheses and testing techniques, companies can uncover valuable insights that improve conversion rates and drive overall profitability.

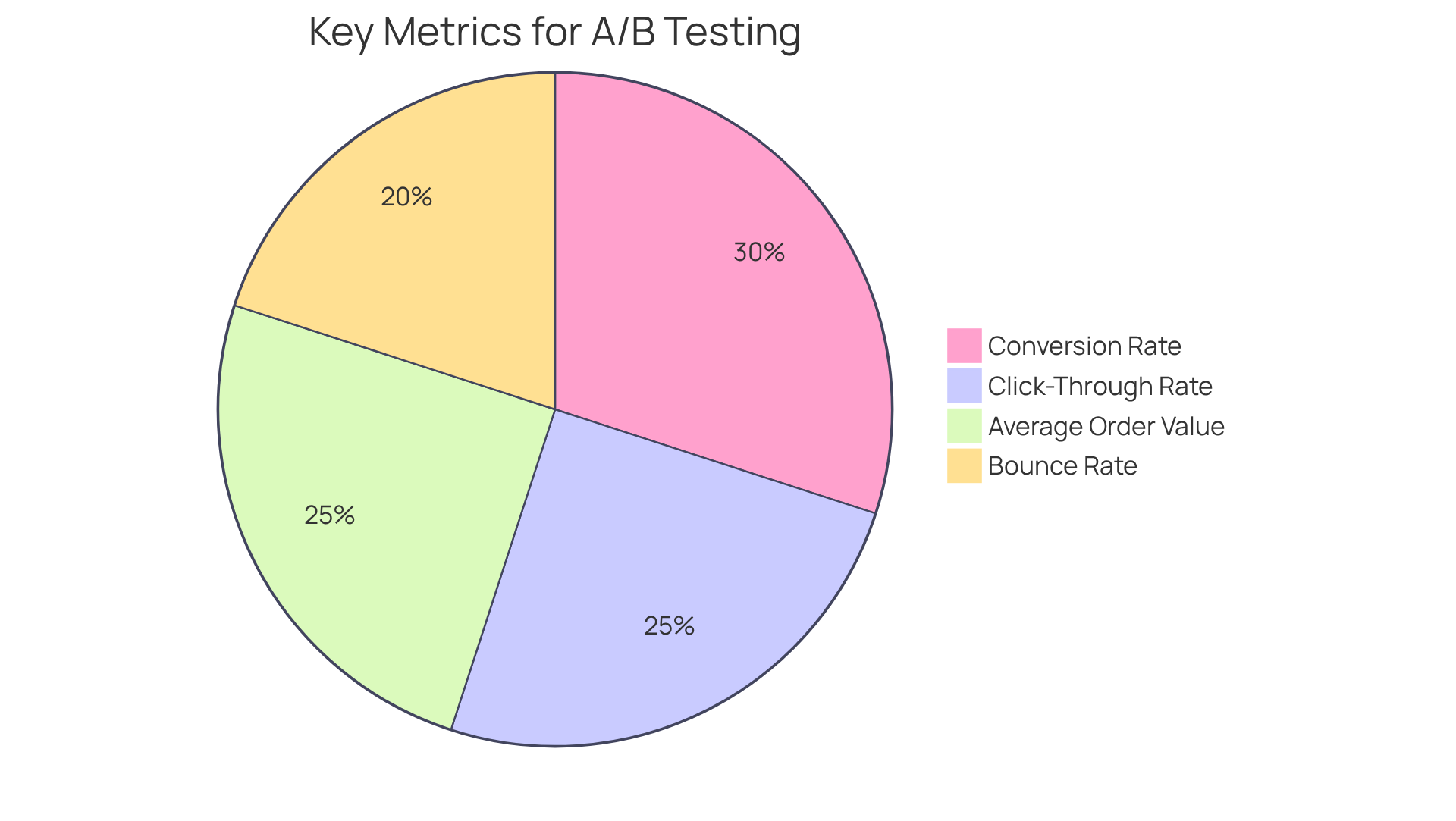

Key Metrics: What DTC Brands Should Track in A/B Testing

DTC brands must prioritize several critical metrics during A/B testing to gauge success effectively. Key metrics include:

- Conversion Rate: This metric measures the percentage of visitors who complete a desired action, such as making a purchase. An increase in conversion rate indicates that the modifications implemented resonate with users.

- Click-Through Rate (CTR): This tracks the percentage of users who click on a specific link compared to the total number of users who view the page. A low CTR may signal issues with calls to action or content relevance.

- Bounce Rate: This indicates the percentage of visitors who leave the site after viewing only one page. A high bounce rate suggests that the landing page lacks engagement or that users are not finding what they expected.

- Average Order Value (AOV): AOV measures the average amount spent by customers per transaction. Increasing AOV can significantly enhance profitability, making it essential to track alongside conversion rates.

By closely monitoring these metrics, DTC companies can gain valuable insights into user behavior and the effectiveness of their A/B testing strategies. For instance, a case study involving a DTC company that optimized its landing page design showed a 25% increase in conversion rates and a 15% boost in AOV, demonstrating the impact of targeted changes. Furthermore, statistical significance should always be verified, with a common threshold set at a p-value of less than 0.05, so that observed changes are unlikely to be due to random chance. This enables companies to refine their strategies and achieve sustainable growth.
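The four metrics defined above reduce to simple ratios over raw counts. The sketch below shows one way to compute them per variant; the `VariantStats` class, its field names, and the numbers are hypothetical, and real analytics platforms may define sessions, views, or bounces somewhat differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one test variant; all field names are illustrative."""
    sessions: int
    single_page_sessions: int  # sessions that ended after one page view
    cta_views: int             # users who saw the link or button
    cta_clicks: int            # users who clicked it
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.sessions

    @property
    def click_through_rate(self) -> float:
        return self.cta_clicks / self.cta_views

    @property
    def bounce_rate(self) -> float:
        return self.single_page_sessions / self.sessions

    @property
    def average_order_value(self) -> float:
        return self.revenue / self.orders


# Hypothetical counts for a single variant.
variant = VariantStats(
    sessions=10_000, single_page_sessions=4_200,
    cta_views=8_000, cta_clicks=640, orders=300, revenue=19_500.0,
)
print(f"Conversion rate: {variant.conversion_rate:.1%}")
print(f"CTR: {variant.click_through_rate:.1%}")
print(f"Bounce rate: {variant.bounce_rate:.1%}")
print(f"AOV: ${variant.average_order_value:.2f}")
```

Computing the same figures for each variant side by side makes trade-offs visible, for example a change that raises conversion rate while lowering AOV.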

Case Studies: Successful A/B Testing Strategies for DTC Brands

Many direct-to-consumer (DTC) companies have effectively harnessed A/B testing to significantly enhance their performance. A compelling example is a $30 million clothing label that partnered with Parah Group, achieving a remarkable 35% increase in sales by revamping their homepage to highlight social proof and optimizing product pricing. Similarly, Grab Green, a cleaning product company with $15 million in revenue, boosted their average order value (AOV) by 80% through strategic A/B tests of free shipping thresholds and product bundles.

These case studies underscore the substantial advantages of implementing structured A/B testing strategies, illustrating how data-informed decisions can enhance customer engagement and boost conversion rates. Furthermore, STRNG Seeds, a rapidly growing DTC cannabis company, reported a staggering 90% increase in AOV by introducing multi-packs and custom landing pages, exemplifying the transformative potential of innovative conversion rate optimization (CRO) strategies.

Such instances highlight the critical role of A/B testing in refining e-commerce strategies and driving revenue growth. With 77% of companies conducting A/B tests on their websites, it is imperative for DTC business owners to acknowledge the importance of achieving a sufficient sample size to ensure statistical relevance. To fully leverage the benefits of A/B testing, brands must continuously analyze their results and adapt their strategies accordingly.

Conclusion

A/B testing stands as a cornerstone strategy for direct-to-consumer (DTC) brands striving to refine their marketing initiatives and elevate conversion rates. The insights presented herein elucidate how adeptly harnessing A/B statistics can yield significant enhancements in user experience and overall profitability. By grasping the intricacies of A/B testing—from statistical significance to considerations of sample size—DTC brands can make informed decisions that profoundly influence their success.

Key arguments underscore the necessity of concentrating on high-impact elements, such as:

- Call-to-action buttons

- Email subject lines

These elements can substantially affect engagement rates. Moreover, the establishment of a robust hypothesis and the avoidance of prevalent testing pitfalls are crucial for brands to derive meaningful insights from their experiments. Case studies exemplify how strategic A/B testing has resulted in remarkable sales growth and improved average order values, highlighting the concrete advantages of a data-driven approach.

Ultimately, A/B testing transcends mere technicality; it is an integral facet of a comprehensive conversion rate optimization strategy. DTC brands are urged to adopt this methodology, persistently analyze outcomes, and adjust their strategies accordingly. By dedicating themselves to rigorous A/B testing practices, companies can unlock their full potential, ensuring sustained growth and success in an increasingly competitive landscape.

Frequently Asked Questions

What is the significance of A/B testing for DTC brands?

A/B testing is crucial for DTC brands as it can lead to conversion rate enhancements of up to 30%. Currently, 77% of organizations are engaging in A/B tests, which help refine marketing strategies and improve user experiences.

Which elements should DTC brands prioritize in A/B testing?

DTC brands should focus on high-impact elements such as call-to-action buttons and email subject lines to significantly increase engagement and sales.

Can you provide an example of the impact of A/B testing on conversion rates?

Yes, straightforward email subject lines can result in 541% more responses compared to more creative ones. Additionally, companies like T.M. Lewin reported a 50% boost in conversion rates through clear returns messaging, and Swiss Gear saw a 137% increase during peak shopping seasons by optimizing their product detail pages.

What is the role of statistical significance in A/B testing?

Statistical significance helps determine if the outcomes of an A/B test are due to the changes made or random variation. A common benchmark for significance is a p-value of less than 0.05, meaning that if the change truly had no effect, a difference at least as large as the one observed would occur less than 5% of the time.

How do confidence intervals contribute to A/B test results?

Confidence intervals define the range within which the true effect of a change is expected to fall, typically at a 95% confidence level. This allows DTC companies to assess the reliability of their results and make informed decisions based on solid data.

What are Type I and Type II errors in the context of A/B testing?

Type I errors occur when a true null hypothesis is incorrectly rejected, while Type II errors happen when a false null hypothesis is not rejected. Understanding these errors is crucial for DTC companies to enhance success rates and profitability.

What sample size is recommended for reliable A/B testing results?

It is recommended that companies aim for a minimum of 200-300 transactions per variation in A/B tests. Larger sample sizes reduce variability and enhance the precision of confidence intervals.

How can DTC companies maximize profitability through A/B testing?

By integrating insights from A/B testing into a comprehensive conversion rate optimization (CRO) strategy that aligns paid ads and landing pages, DTC companies can maximize profitability and drive sustainable growth.
