Overview

This article presents a structured approach to A/B testing and explains why it matters for direct-to-consumer (DTC) brands that want to refine their marketing strategies and improve customer experiences. It outlines a systematic process of goal identification, hypothesis formulation, and result analysis, and shows how methodical A/B experimentation supports informed decisions that raise conversion rates and contribute to overall brand success.

Introduction

A/B testing has emerged as a pivotal strategy for direct-to-consumer (DTC) brands aiming to enhance their marketing effectiveness and drive sales. By systematically comparing variations of marketing assets, companies can uncover insights that lead to improved conversion rates and increased customer engagement. However, the process is not without its challenges.

How can brands ensure they are conducting these tests effectively and avoiding common pitfalls? This article delves into the essential steps for executing successful A/B tests, highlighting the significant benefits and potential mistakes that DTC brands must navigate to thrive in a competitive landscape.

Define A/B Testing and Its Importance for DTC Brands

A/B testing, also known as split testing or A/B experimentation, is a method for comparing two versions of a webpage, advertisement, or other marketing asset to determine which performs more effectively. For direct-to-consumer (DTC) companies, it is vital for refining marketing strategies, optimizing landing pages, and personalizing customer experiences. By systematically assessing variations, businesses can make informed decisions that lead to improved conversion rates and increased revenue.

For example, a DTC company may test two different headlines on a product page to identify which drives more sales. Because the test runs on existing traffic, the insights gained can improve audience engagement and profitability without additional advertising costs. A/B testing also reduces uncertainty in marketing by relying on data rather than opinions or trends, allowing companies to validate hypotheses and prioritize impactful changes.

This iterative process not only improves outcomes over time but also contributes to a higher return on investment (ROI) when compared to alternative marketing methods. Additionally, A/B experimentation can elevate customer satisfaction by fine-tuning various touchpoints throughout the customer journey. This makes A/B testing an indispensable strategy for DTC brands navigating the complexities of consumer behavior and market demands.
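To make the headline example concrete, the following minimal sketch computes the conversion rate of each version and the relative lift of one over the other. The `VariantResult` class, the `relative_lift` helper, and all visitor and purchase counts are hypothetical and chosen only for illustration; they do not come from any real test.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Visitors and purchases recorded for one version of the page."""
    name: str
    visitors: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        # Share of visitors who converted; 0.0 if no traffic was recorded.
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_lift(control: VariantResult, variant: VariantResult) -> float:
    """Relative change in conversion rate of the variant versus the control."""
    if control.conversion_rate == 0:
        raise ValueError("control has no conversions; lift is undefined")
    return (variant.conversion_rate - control.conversion_rate) / control.conversion_rate


# Hypothetical numbers for two headlines, for illustration only.
headline_a = VariantResult("Headline A", visitors=5000, conversions=150)
headline_b = VariantResult("Headline B", visitors=5000, conversions=180)

print(f"{headline_a.name}: {headline_a.conversion_rate:.2%}")
print(f"{headline_b.name}: {headline_b.conversion_rate:.2%}")
print(f"Relative lift: {relative_lift(headline_a, headline_b):.1%}")
```

A raw lift like this is only a starting point: whether the difference is large enough to act on depends on sample size and statistical significance.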

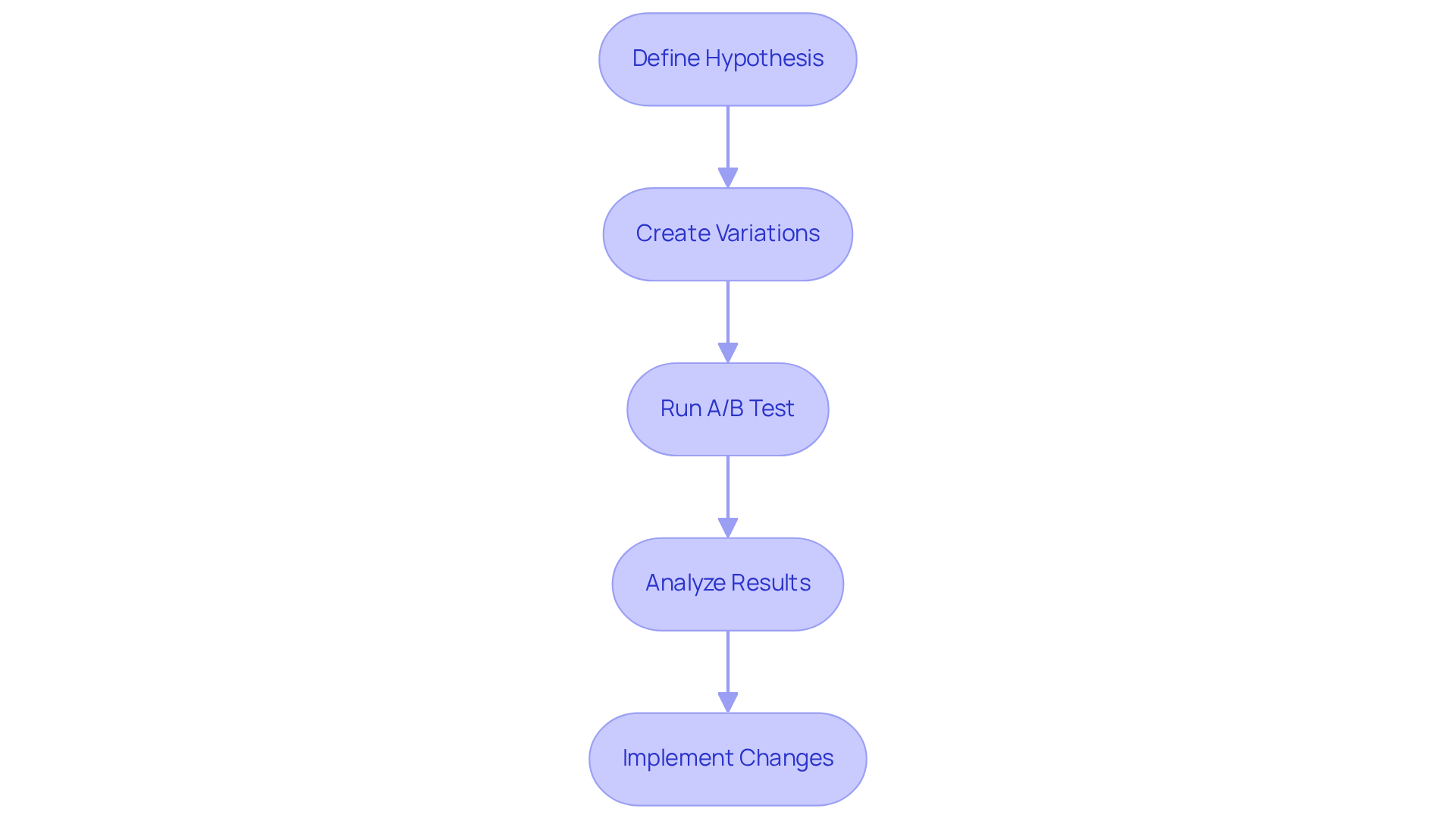

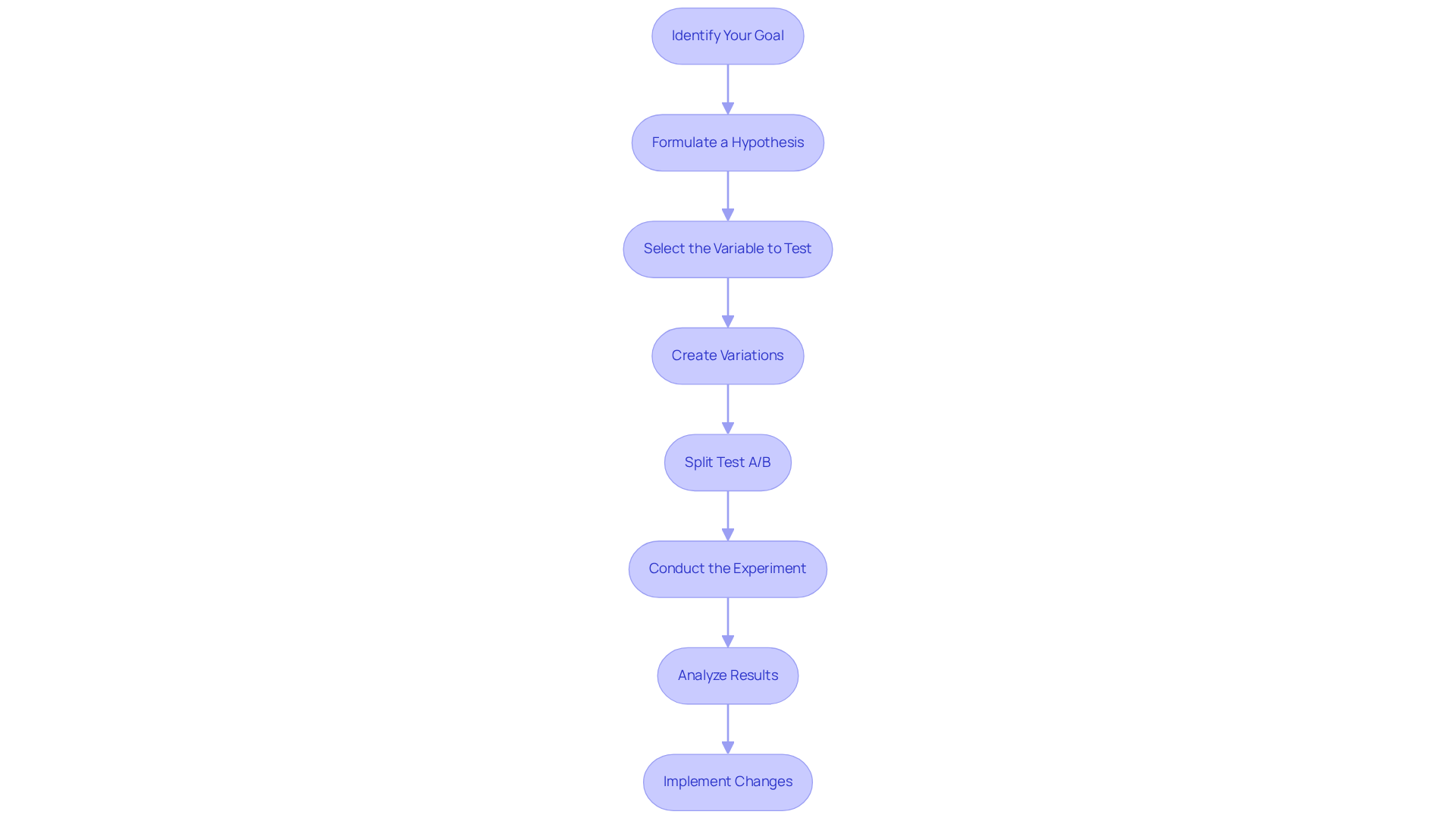

Step-by-Step Process for Conducting A/B Tests

- Identify Your Goal: Begin by clearly defining your objective for the A/B test. Whether your aim is to increase conversion rates, improve click-through rates, or enhance user engagement, having a precise goal will steer your evaluation process effectively.

- Formulate a Hypothesis: Based on your defined goal, craft a hypothesis about the change that may drive better performance. For instance, 'Changing the call-to-action button color from blue to green will increase clicks' provides a clear direction. A well-articulated hypothesis is critical because it determines what you measure and how you judge success; A/B experiments are reported to boost conversion rates by as much as 30%, though results vary widely.

- Select the Variable to Test: Choose a single element for testing at a time, such as headlines, images, or button placements. Isolating one variable allows you to accurately assess its impact on performance. Ensure that your sample size is sufficiently large to yield statistically significant results.

- Create Variations: Develop two versions of the asset under evaluation: the original (Version A) and the modified version (Version B). It is essential that the only difference between the two is the variable being examined.

- Split Your Audience: Randomly divide your audience into two groups, where one group views Version A and the other views Version B. This randomization is vital for obtaining unbiased results.

- Conduct the Experiment: Launch your A/B experiment and allow it to run for an adequate duration to gather significant data. The timeframe will depend on your traffic levels and the significance of the changes being tested. Avoid conducting evaluations during atypical periods unless you are specifically assessing those scenarios.

- Analyze Results: Once the test concludes, analyze the data to determine which version performed better. Focus on metrics relevant to your goal, such as conversion rates or engagement levels. For example, [Parah Group has achieved a 36% increase in ROI on advertisements for clients through effective A/B evaluation strategies.](https://parahgroup.com)

- Implement Changes: If one version significantly outperforms the other, proceed to implement the winning variation. In cases of inconclusive results, consider further examination or refining your hypothesis. As noted by industry experts, A/B evaluation is fundamental for enhancing customer experience and facilitating data-driven decisions.
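The audience split and the result analysis described in the steps above can be sketched in a few lines of Python. This is one possible approach under stated assumptions, not a prescribed implementation: `assign_variant` uses hash-based bucketing so that a returning visitor always sees the same version, and `two_proportion_z_test` applies a standard two-proportion z-test to the conversion counts. The function names, the experiment label, and the traffic numbers are all hypothetical.

```python
import hashlib
import math
from typing import Tuple


def assign_variant(user_id: str, experiment: str = "cta-color") -> str:
    """Deterministically bucket a user into 'A' or 'B' with a roughly 50/50 split."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# The same visitor is always assigned to the same version.
print(assign_variant("visitor-123"))

# Hypothetical traffic: 5,000 visitors per version, for illustration only.
z, p_value = two_proportion_z_test(conv_a=150, n_a=5000, conv_b=180, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
print("significant at 5%" if p_value < 0.05 else "inconclusive at 5%")
```

With these illustrative numbers, Version B converts at 3.6% against 3.0% for Version A, yet the p-value stays above 0.05. That is the inconclusive case described in the final step: the sensible next move is to gather more data or refine the hypothesis rather than declare a winner.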

Identify and Avoid Common A/B Testing Mistakes

- Establishing a Clear Hypothesis: A/B experiments must start with a precisely defined hypothesis. This clarity is crucial for identifying which metrics to measure and what counts as success. Setting specific objectives keeps each experiment aligned with the desired outcome.

- Isolating Variables in Testing: Testing multiple changes simultaneously can obscure which specific alteration is responsible for any observed performance difference. To assess the impact of a modification, focus on a single variable in each test. This approach gives clearer insight into what drives conversion increases.

- Ensuring Sufficient Sample Size: Running a test with too few participants can yield inconclusive results. Aim for a sample size large enough to reach statistical significance; a common rule of thumb is at least 100 conversions per variation. This makes it more likely that findings are robust and not merely the result of random fluctuation.

- Considering External Influences: External factors, such as seasonal trends or concurrent marketing campaigns, can distort results. Account for these factors when designing an A/B test to avoid misinterpreting the data and pursuing ineffective optimization strategies.

- Allowing Adequate Evaluation Duration: Ending a test prematurely can lead to erroneous conclusions about performance. Let each A/B test run long enough, ideally 3 to 4 weeks, to gather reliable data that reflects genuine user behavior over time.

- Segmenting Your Audience Effectively: Failing to segment your audience can lead to misleading conclusions. Different demographics may respond differently to the same change, so running A/B tests across segments can uncover nuanced insights.

- Analyzing Results Thoroughly: A thorough analysis is essential once an A/B test is complete. Skipping a comprehensive review can mean missed opportunities for improvement and a weaker understanding of user behavior, which is critical for future optimization efforts.
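The sample size and duration guidance above can be estimated before a test starts. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function names, the 5% significance level, the 80% power target, and the traffic figures are assumptions chosen for illustration rather than recommendations from any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate visitors needed per variation to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_days(sample_per_variation: int, daily_visitors: int, variations: int = 2) -> int:
    """Days needed to fill every variation, given total daily traffic split evenly."""
    return math.ceil(sample_per_variation * variations / daily_visitors)


# Hypothetical inputs: 3% baseline conversion rate, aiming to detect a 20% relative lift.
needed = sample_size_per_variation(baseline_rate=0.03, relative_lift=0.20)
print(f"Visitors needed per variation: {needed}")
print(f"Estimated duration at 1,000 visitors/day: {estimated_days(needed, 1000)} days")
```

Under these assumptions the estimate comes to roughly 14,000 visitors per variation, or about four weeks at 1,000 visitors per day. Smaller expected lifts or lower baseline rates push the requirement up quickly, which is why low-traffic sites often need longer tests or bolder changes.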

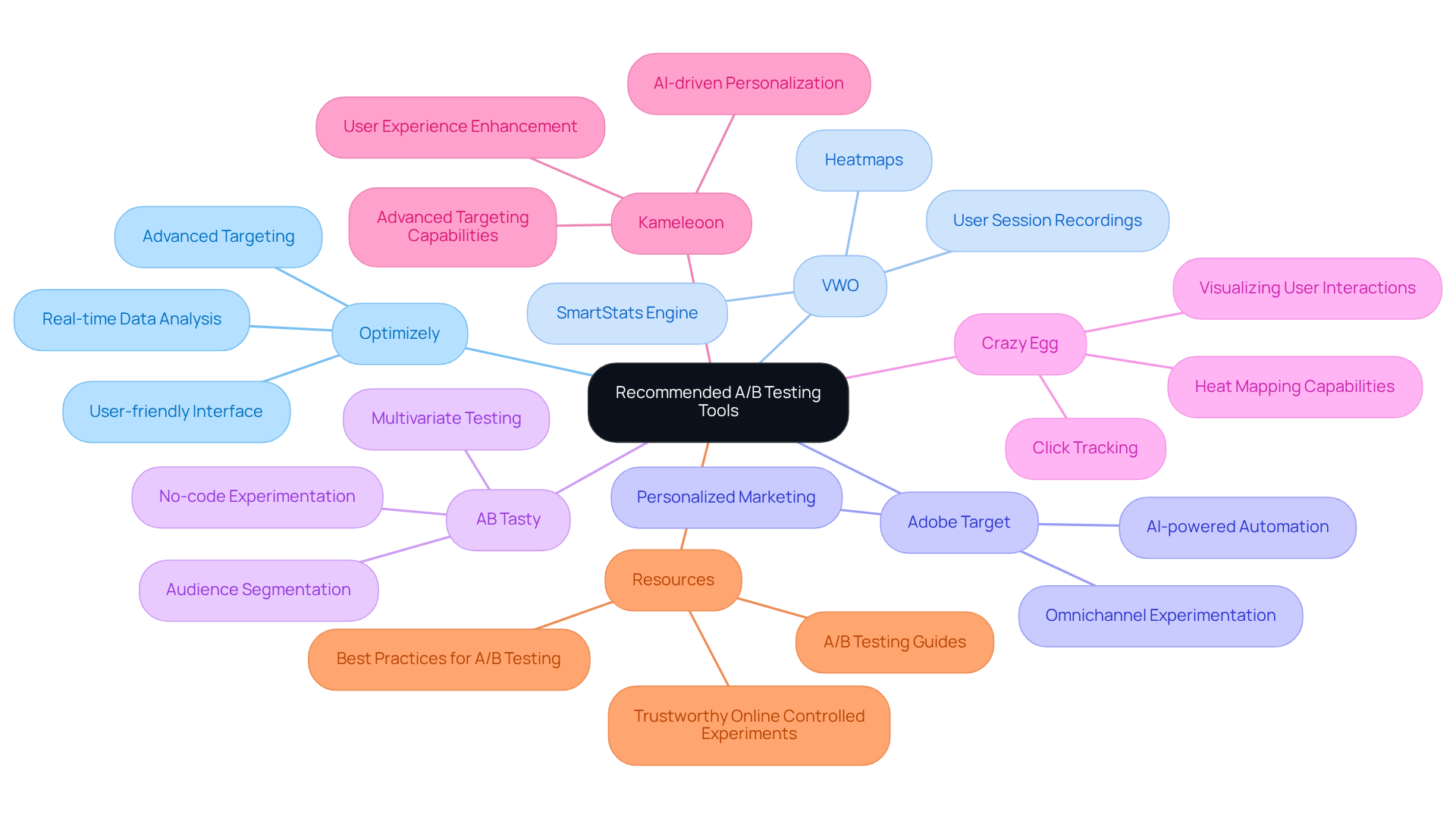

Recommended Tools and Resources for Effective A/B Testing

- Optimizely: Renowned for its versatility, Optimizely offers a user-friendly interface and advanced features for running A/B tests across websites and mobile applications. Its real-time data analysis makes it a favored choice for companies that want accuracy in their testing. Case studies report that organizations using Optimizely have achieved significant improvements in conversion rates.

- VWO (Visual Website Optimizer): VWO combines A/B testing with heatmaps and user session recordings, delivering insight into user behavior. This combined approach helps companies understand how design modifications influence conversion rates, facilitating informed decision-making.

- Adobe Target: Aimed at larger DTC companies, Adobe Target excels in personalized marketing and supports A/B testing alongside it. Its advanced features support omnichannel experimentation, enabling brands to deliver tailored experiences that drive conversions. Companies using Adobe Target have reported measurable increases in engagement and sales.

- AB Tasty: This platform offers a robust suite of testing and personalization tools, making it well suited to companies aiming to enhance user experiences. AB Tasty's no-code experimentation feature allows marketers to run A/B tests independently, streamlining the testing process.

- Crazy Egg: Known for its heat mapping capabilities, Crazy Egg also provides A/B testing tools, helping companies visualize user interactions while they experiment. This dual functionality is useful for optimizing website performance and user engagement, making it a valuable resource for small to medium-sized businesses.

- Kameleoon: Kameleoon is an AI-driven platform for A/B experimentation and personalization that aims to enhance user experiences while boosting conversions. Its advanced targeting capabilities make it particularly suitable for companies seeking to refine their marketing strategies.

- Resources: For deeper insight into A/B experimentation methodology, consider 'Trustworthy Online Controlled Experiments' by Ron Kohavi, Diane Tang, and Ya Xu, which offers a comprehensive treatment of effective practices. It is also important to select an A/B testing tool that aligns with your business objectives and growth strategy. Remember that implementing [A/B testing tools](https://convertize.com/ab-testing-tools) requires a financial and time commitment, so weigh the options carefully before proceeding.

Conclusion

Implementing A/B testing stands as a fundamental strategy for direct-to-consumer (DTC) brands intent on amplifying their marketing efficacy and driving sales. By systematically contrasting various versions of marketing assets, brands can harness data-driven insights to optimize customer interactions and refine their overarching strategy. This method not only elevates conversion rates but also cultivates a profound understanding of consumer behavior, empowering brands to tailor their offerings to align with customer expectations.

In this guide, we have delineated key steps for executing successful A/B tests, encompassing the identification of goals, formulation of hypotheses, and analysis of results. The significance of isolating variables, ensuring sufficient sample sizes, and circumventing common pitfalls that may distort results has been underscored. Furthermore, tools such as Optimizely and VWO are recommended as valuable resources for implementing effective A/B testing, enabling brands to make informed decisions that yield measurable enhancements in performance.

Ultimately, embracing A/B testing represents a proactive strategy for DTC brands striving to excel in a competitive environment. By committing to this iterative process, brands can not only refine their marketing strategies but also enhance customer satisfaction and loyalty. The insights derived from A/B testing extend beyond immediate gains; they constitute a long-term investment in comprehending and responding to the evolving preferences of consumers, paving the way for sustained success.

Frequently Asked Questions

What is A/B testing?

A/B testing, also known as A/B experimentation, is a method for comparing two versions of a webpage, advertisement, or other marketing assets to determine which one performs more effectively.

Why is A/B testing important for direct-to-consumer (DTC) brands?

A/B testing is vital for DTC brands as it helps refine marketing strategies, optimize landing pages, and personalize customer experiences, leading to improved conversion rates and increased revenue.

How does A/B testing work?

A/B testing works by systematically assessing variations of marketing assets, such as different headlines or designs, to gather data on which version drives better performance, such as higher sales.

What are the benefits of using A/B testing for marketing decisions?

A/B testing allows businesses to make informed decisions based on data rather than opinions, reduces uncertainty in marketing, validates hypotheses, and prioritizes impactful changes.

How does A/B testing contribute to return on investment (ROI)?

A/B testing enhances outcomes over time and can lead to a higher ROI compared to alternative marketing methods by optimizing strategies and maximizing profitability.

In what ways can A/B testing improve customer satisfaction?

A/B testing can elevate customer satisfaction by fine-tuning various touchpoints throughout the customer journey, ensuring that marketing efforts align with consumer preferences.

What role does A/B testing play in navigating consumer behavior and market demands?

A/B testing is an indispensable strategy for DTC brands as it helps them understand and adapt to the complexities of consumer behavior and market demands through data-driven insights.
